This is the first article in a three-part series on artificial neural networks.

You probably know plenty of technology buzzwords.

For example, there’s Big Data. Or Data Science. Don’t forget Machine Learning and Convergence. The list goes on.

But have you ever thought about why buzzwords are “a thing”?

Basically, words become buzzwords when we use them heavily during the first stage of the so-called excitation curve, which describes humans’ psychological relationship with new ideas.

The first stage is when we get excited about a new idea without necessarily understanding it completely. With time and better practical experience with the idea, we reach a tipping point where we decide that it may not be so great after all. And finally, when we fully understand its limitations, benefits, and practical use cases, we get comfortable with the idea.

The way many observers think about artificial neural networks (ANNs) is definitely following this pattern.

But I think the ANN is actually unique when it comes to buzzwords – and I’ll explain why in the last article of this 3-part series.

But first, in this article, I’ll cover some background. In the second article, I’ll explain where we are today with ANNs.

Let’s start with defining ANNs.

Artificial neural networks are computer systems designed to learn in a way loosely modeled on the human brain.

The networks have artificial neurons that send signals to one another via artificial synapses. Each connection carries a weight, and the weights are adjusted as learning takes place, so the connections that matter grow stronger.
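To make the idea of weighted connections a little more concrete, here is a minimal sketch of a single artificial neuron in Python. The function names (`neuron_output`, `strengthen`), the step-style firing rule, and the learning rate of 0.5 are my own assumptions for illustration, not a description of any particular system.

```python
def neuron_output(inputs, weights, bias):
    """Fire (1) if the weighted sum of incoming signals is above zero, else stay silent (0)."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0


def strengthen(inputs, weights, bias, target, rate=0.5):
    """One learning step: nudge each weight in proportion to the error and its input."""
    error = target - neuron_output(inputs, weights, bias)
    new_weights = [w + rate * error * x for x, w in zip(inputs, weights)]
    return new_weights, bias + rate * error


# Hypothetical starting point: an untrained neuron with two incoming connections.
weights, bias = [0.0, 0.0], 0.0
signals = [1, 1]

before = neuron_output(signals, weights, bias)   # untrained: the neuron stays silent
weights, bias = strengthen(signals, weights, bias, target=1)
after = neuron_output(signals, weights, bias)    # after one step: the same signals make it fire

print(before, after, weights)
```

After a single correction, both connection weights have grown from 0.0 to 0.5, which is the "connections become stronger" idea in miniature.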

The key feature of artificial neural networks is that they can learn from examples without being explicitly programmed for each task. As a result, the basic ANN use cases relate to:

- Image recognition
- Speech recognition
- Text and speech translation

Modern applications are quite advanced, but the original concept goes back many years.

Artificial neural networks have been around since the mid-20th century.

The original goal back then was quite simple: make a computer learn like a human brain.

In 1936, Alan Turing described a machine with a simple algorithm that altered a sequence of ones and zeroes, written on tape, based on its position on the tape. Within about a decade, the first electronic computers were built.

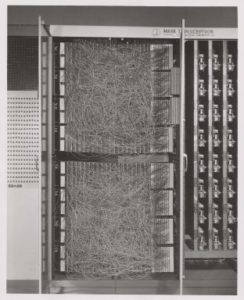

The perceptron, an image recognition algorithm, followed in the 1950’s. The first computer built around this concept looked like this:

As you can see, even the wiring was supposed to simulate the connections of neurons in the human brain.

A paper describing the perceptron’s shortcomings, particularly that it was effective only at solving simple problems, led to a drastic drop in interest in artificial neural networks in the 1960s.
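To see what "simple problems" means in practice, here is a small sketch of a perceptron-style learning rule in Python. It is an illustration under my own assumptions (the names `train_perceptron` and `accuracy`, integer weights starting at zero, at most 50 passes over the data), not the original 1950s implementation. It learns the logical AND function, but cannot fully learn XOR, the textbook example of a problem that a single perceptron cannot solve.

```python
def predict(weights, bias, x):
    """Output 1 if the weighted sum of the two inputs is above zero, else 0."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0


def train_perceptron(samples, epochs=50, rate=1):
    """Adjust weights after every wrong answer; stop early once a full pass has no mistakes."""
    weights, bias = [0, 0], 0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                weights = [w + rate * error * xi for w, xi in zip(weights, x)]
                bias += rate * error
        if mistakes == 0:
            break
    return weights, bias


def accuracy(samples, weights, bias):
    """Fraction of examples the trained perceptron gets right."""
    correct = sum(predict(weights, bias, x) == target for x, target in samples)
    return correct / len(samples)


# Each example is ((input_a, input_b), expected_output).
AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias = train_perceptron(AND)
xor_weights, xor_bias = train_perceptron(XOR)

print("AND accuracy:", accuracy(AND, and_weights, and_bias))
print("XOR accuracy:", accuracy(XOR, xor_weights, xor_bias))
```

AND can be separated with a single straight line, so the rule settles on weights that get every example right. No single line separates the XOR examples, so no amount of extra training gets all four right.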

Therefore, it may seem like artificial neural networks have already had their moment as a buzzword. By now, we should know where we stand with them and be familiar with their real-world capabilities, right?

In fact, better knowledge and modern computing capabilities have pushed artificial neural networks back into buzzword territory.