You’ve probably read dozens of theoretical articles on data lakes: what they are, the best practices for implementing one, and the best architectures to support it, to name a few topics. To set one up properly, though, you need experience and expertise.

We believe that you learn best by acquiring knowledge from practitioners. We’ve been developing data lake infrastructures and migration procedures for many years, and have learned practical lessons along the way. In this article, we’ll share our experiences and discuss ways of implementing data lakes in your company.

Here, we’ll focus on optimal architectures for data lakes, how we set up some of our own data lake solutions, and the advantages of our unique approach.

Optimal architecture for data lakes

A data lake is a repository for exceedingly large amounts of structured, semistructured, and unstructured data, such as binary large objects (BLOBs), images, audio, and data from SQL and other databases. There’s no fixed limit on size or file type in a data lake, as the data can be stored in its native format. The data structure does not have to be predefined before the data is queried or analyzed. The design of the system that handles the data lake is thus crucial, as it influences speed and performance.
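To make the idea of an undefined structure concrete, here is a minimal sketch of applying a schema at read time rather than at write time. The record layout, the field names, and the `read_with_schema` helper are all hypothetical and chosen only for illustration; a real data lake would rely on a query engine rather than hand-written parsing.

```python
import json

# Raw records are stored as they arrive; nothing enforces a structure on write.
# (Hypothetical sample data: note the mixed types and missing fields.)
RAW_EVENTS = [
    '{"store": "S1", "sku": "A-100", "units": "3"}',
    '{"store": "S2", "sku": "B-200", "units": 5, "promo": true}',
    '{"store": "S1", "sku": "A-100"}',
]

def read_with_schema(lines, schema):
    """Apply a schema at read time: select fields, coerce types, fill defaults."""
    for line in lines:
        record = json.loads(line)
        yield {
            field: (cast(record[field]) if field in record else default)
            for field, (cast, default) in schema.items()
        }

# One analysis decides which fields it needs; another could read the
# same raw records with a different schema.
SALES_SCHEMA = {"store": (str, None), "units": (int, 0)}

rows = list(read_with_schema(RAW_EVENTS, SALES_SCHEMA))

units_by_store = {}
for row in rows:
    units_by_store[row["store"]] = units_by_store.get(row["store"], 0) + row["units"]

print(units_by_store)
```

The raw records stay untouched, so a later use case can reinterpret them without a migration.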

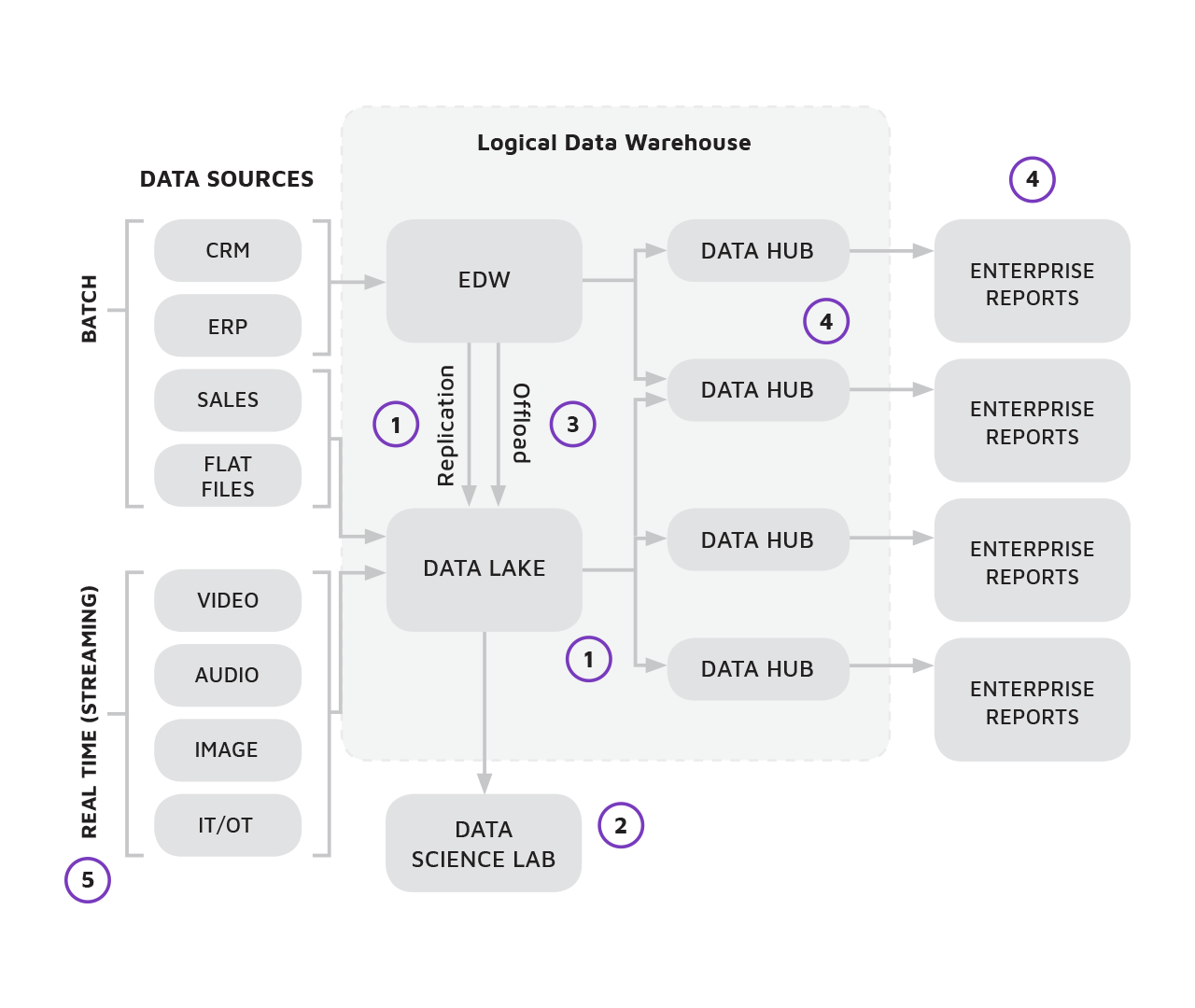

There are quite a few data lake architectures on the market. We believe that the best way to implement a cloud-based data lake project is to start with an adoption analysis. After developing a general strategy for data migration, such as moving from a data warehouse to a data lake, you can focus on developing a minimum viable product (MVP). Only then should you proceed to a more complex project, such as embracing the whole database (as shown below).

Adopting a unique model for data lake implementation

Here’s a business-oriented road map of a data lake-based development/migration system:

- MVP. This satisfies critical business needs with sufficient functionality. Its iterative development process allows for prompt feedback that informs further product updates. Fast time to value is one of the key objectives and expectations.

- Evolution. In this phase, new data types are added, and more focus is put on a common understanding, consistency, and accuracy of data.

- Expansion. Based on what has been learned, new enhancements and features are proposed and implemented. Work focuses on expanding data lake use cases and driving further adoption while making sure that established users are not affected by the changes.

- Sunset. This phase gives the opportunity to phase out the legacy systems that were part of the transformation journey.

One of the most costly mistakes a C-level officer or executive can make is to underestimate the size and scope of a data lake project and try to develop or migrate the whole system all at once. It’s good practice to adopt a step-by-step approach: create an MVP, test it with your data, and only then adopt the solution throughout the organization.

If you plan to tinker with the whole system at once, you risk disrupting your business’s reporting and other systems. Often, the data warehouse is not entirely moved to a new system but is instead partially and gradually migrated. It’s treated as a backup for the development of the new system. Such systems might even be a source of data for data lakes until the developer decides to slowly back out of the most cost-generating yet ineffective functionalities and repositories of data warehouses. It’s in this sense that data lakes broaden the analytical capabilities of data warehouses.

Some datasets present in data warehouses are better suited for data lakes. However, they were originally placed in data warehouses since there were no other options in the past. Traditional data warehouses, or enterprise data warehouses, together with data lakes (and other sources like streaming) comprise logical data warehouses.

Lingaro first focuses on consultations and aims to understand our partner’s needs. We work toward a fast time to value for the initial MVP, and then continue to develop the data lake solution and its services. After assessing the cost efficiency, we implement the project and further customize it depending on the client’s needs. We’ve found that the best goal is often a temporary coexistence of the data warehouse and data lake solutions, not a fast “lift-and-shift” migration to data lakes.

Lingaro’s architecture of data lake systems

Data and analytics companies might need to access data through multiple systems or platforms, which can be time-consuming and quite costly. To drive more value faster from our clients’ data, we often propose building a new, advanced infrastructure based on cloud data lakes.

Data warehouse-based solutions most often deliver siloed data, which limits the performance of analytical applications. This might result in the development of insufficient, trimmed-down shadow business intelligence (BI) solutions that lack a true overview of the company’s business performance. With these underperforming shadow BI solutions, different company departments cannot integrate their data, which ultimately leads to a chaotic system with multiple versions of the truth.

Even though data warehouses are still mainstream, they don’t allow for fast and efficient utilization of all new and old data sources. It may be difficult, for instance, to connect them to existing IT ecosystems and aggregate them with other data.

Data lake ecosystems, on the other hand, may alleviate these problems, as they combine multiple sources of data in one place and make them available for developers to build solutions or functionalities in one unified toolset. This allows for fast and efficient decision-making based on updated and editable data. Lingaro has been adopting this approach in creating scalable, cloud-based, and platform-agnostic solutions in the form of technically challenging modules such as extract-transform-load (ETL) frameworks and master data management tools.

We also start with design workshops to establish common ground, understand business needs, and determine the data patterns and solutions that must be implemented in the new system. We opt for cloud-based data lakes since they provide more advanced and efficient data analysis, clustering, and in-memory processing. Cloud-based functionalities allow for flexible scalability and performance that are difficult to attain with on-premises solutions.

Additionally, we understand that an independent and efficient system requires detailed documentation, so we always produce it. Documentation is necessary so that our client’s IT team remains independent and has a clear overview of the company’s data processing logic, major data flows, and the business logic behind them.

We have delivered various cloud-based data solutions, including a global-scale Microsoft Azure data deployment. Our solutions usually cover the following features and functionalities:

- A central repository for all the company’s data assets, including crucial ones regarding point of sale (PoS), shipment analytics, and store execution measurement

- Improved master data management quality with tiers that allow local master data control with comprehensive global reporting

- Capabilities to upload and modify local reference data for key business users via a web interface

- Reference data harmonization that expands data analysis capabilities across internally and externally produced data sets

- Accelerated creation of data marts and BI applications

- Scalability, flexibility, and optimal performance with a technology-agnostic platform
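As a rough illustration of reference data harmonization, the sketch below maps product codes from an internal and an external source to one shared key so that both data sets can be reported together. The source names, codes, and the `REFERENCE_MAP` table are hypothetical and do not describe Lingaro’s actual implementation.

```python
# Hypothetical reference data: (source system, local code) -> global product key.
REFERENCE_MAP = {
    ("erp", "A-100"): "GLOBAL-1",
    ("pos_vendor", "100A"): "GLOBAL-1",
    ("erp", "B-200"): "GLOBAL-2",
}

# Internally produced shipment data and externally produced PoS data (made up).
shipments = [
    {"source": "erp", "code": "A-100", "shipped": 40},
    {"source": "erp", "code": "B-200", "shipped": 10},
]
pos_sales = [
    {"source": "pos_vendor", "code": "100A", "sold": 25},
    {"source": "pos_vendor", "code": "ZZZ", "sold": 1},
]

def harmonize(records):
    """Attach the global key to each record; set aside records with no mapping."""
    matched, unmatched = [], []
    for rec in records:
        key = REFERENCE_MAP.get((rec["source"], rec["code"]))
        if key is None:
            unmatched.append(rec)
        else:
            matched.append({**rec, "global_key": key})
    return matched, unmatched

ship_ok, ship_missing = harmonize(shipments)
pos_ok, pos_missing = harmonize(pos_sales)
unmatched = ship_missing + pos_missing

# Combine both sources into one report keyed by the harmonized product key.
report = {}
for rec in ship_ok + pos_ok:
    totals = report.setdefault(rec["global_key"], {"shipped": 0, "sold": 0})
    totals["shipped"] += rec.get("shipped", 0)
    totals["sold"] += rec.get("sold", 0)

print(report)
print(unmatched)
```

Records without a mapping are collected rather than dropped, which is where a web interface for business users to maintain local reference data would come in.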

Some of the elements of a cloud-based data lake infrastructure that Lingaro implements

Cloud-based data lake: Benefits and use cases

A well-implemented cloud-based data lake system is easily available not only to IT specialists but also to other company employees and end users. This requires developing a toolset and interface that make crucial data and key performance indicators (KPIs) from different sources easily accessible in a single view. This, in turn, supports faster, more advanced analysis and trend investigation, better high-level decisions, and quicker reactions to changing market trends.

Data lakes do more than consolidate different data types into one repository. When built properly, they allow for the fast creation of new BI applications. This functionality is the first step in developing an advanced analytics and global-scale data enterprise. The final system can combine filtered and secure data into regional data hubs and downstream applications. The data can then be kept up to date, with reporting, extraction, and analysis available to business users in one toolset. The updated data can be accessed with BI tools on any device, such as laptops, smartphones, and tablets. This makes business decision-making swift and free of technological bottlenecks.

Data lake-based solutions comprise a data ecosystem that’s not only simple and scalable, but also leads to long-term cost savings on infrastructure, hardware, and their maintenance.

We also have experience working with clients whose big data encompasses more than 25 terabytes (TB) and serves more than 200 business users. This kind of data ecosystem would be costly to maintain and support in an on-premises data warehouse; activating specific clusters only when they are needed for analysis and reporting is a better fit. Once the system that handles the data is set up, it can function as a central hub that’s easily operated by its business users.

Gartner’s recent report cautioned that companies will drown in data if they don’t devise a plan to deal with it. This means that IT professionals need to develop novel approaches, such as data lakes, to prevent issues arising from the vast volume of data in their organizations.