Quality assurance in IT projects is vital. Quality can encompass IT systems, applications, business processes, project management, and above all, data. Organizations need to embed quality for data and analytics projects that their teams deploy, implement, and maintain. After all, the quality of the data forms the foundation of any type of IT project.

Ensuring quality isn’t just an important element in IT projects. It has a critical impact on the business, too — so much so that poor data quality, for instance, costs organizations an average of US$12.9 million annually. In fact, 82% of surveyed senior data executives reported that issues in data quality are a barrier to data integration, which is groundwork for digital transformation.

Poor data quality can hinder the business’s initiatives toward digital transformation, exacerbate the complexity of its data ecosystems, lessen the accuracy of decision-making, adversely affect customer reputation, and even become a compliance risk. Indeed, the level or degree of quality can enhance or undermine the organization’s efforts toward data-driven decision-making — but what can affect data quality, beyond basic operational ones? Here, we’ll explore the aspects that directly affect or influence data quality — factors that have immediate, tangible impact on data quality.

Factors that directly affect data quality

My professional experience primarily involves working in the banking sector, i.e., financial institutions with stringent standards on data. Banks are institutions of public trust where we often accumulate savings throughout our lives, so we obviously expect absolute safety and reliability.

The observations, recommendations, and standards we’ve set here can apply to projects that expect rigorous quality in managing data. Some of them may seem too stringent for projects from other sectors or noncritical business processes, but it’s always worth aiming.

Identifying relevant data. More than ever, we’re surrounded by a huge amount of data. Are all these data points relevant in the project? Is the data reliable? We can read data from several different media and see that the same facts can be painted in different lights because they are saturated with opinion-forming content. So, what data should be considered in data and analytics projects?

This is where the role of a data analyst comes into play — a person who has the analytical skills and the ability to efficiently identify valuable information amidst a multitude of information. In the case of banking projects, distinguishing essential from irrelevant data might be tricky. Relying on knowledge or even intuition may cause that seemingly irrelevant data to be omitted in the data model, but might’ve been meaningful or valuable in practice.

But what does “valuable data” mean? In our experience, this would be data that is true, up-to-date, and objective (without evaluating components) in relation to our areas of our interest.

Understanding the source data. Once we’ve identified the relevant data sources, it’s necessary to take the appropriate approach for their analysis to understand their data properly and thoroughly. There are two must-haves for this: documentation describing the source data, and the data analyst's business knowledge.

Documentation of source data cannot be limited to a list of main entities described in single or even several sentences. The description should be twofold: something general to present — in a holistic manner — what the data relates to, and something detailed so that the individual fields can be understood. Ultimately, the design work goes down to the level of individual fields, which is why understanding them is essential. The description should precisely define their business meaning, granularity (what level the data applies to), and integrity constraints (e.g., data dependencies, allowed range of values).

Business knowledge is equally important. Even with the best documentation, a person who does not understand the terms used in each business field will not be able to accurately understand it. It might be possible to understand the technical aspects of data organization and the method of their storage, but they will not understand the business sense.

Managing and processing quality data

Equipped with good data that we understand thoroughly, we can now plan and implement the next stage: properly uploading data to the central database from which analyses can be conducted. The Extract, Transform, Load (ETL) process, especially in large organizations, is often a source of issues in data quality. Enterprises and large organizations such as financial institutions that operate in many countries have their data dispersed in different IT systems and geographic locations.

To obtain knowledge from the data that they have, they must collect this data in one place. This means developing processes that supply the central database with data from individual locations or units. The processes used to do this rely on reading the source data, transforming them so that they correspond to the data model in the target database, and loading them into this database.

Unfortunately, there are many misunderstandings in this area. For example, in the source system, there are three data points: A, B, and C, where C is calculated on basis of data points A and B. The ETL process in such situations also has an embedded algorithm for calculating C, although it is available in the source system. Unfortunately, the algorithm in the ETL process sometimes doesn’t correspond to the algorithm implemented in the source. This may be for various reasons, such as:

-

Variety of products in source systems. The algorithm can have a completely different shape for different products. While maintaining a dozen of products in source systems is doable, doing the same for thousands of products in ETL engines is a much greater challenge. In the ETL process, for instance, reading data from many sources and loading it into one system might involve reading data on thousands of different products, which can make the maintenance of such algorithms very difficult.

-

Algorithms changing over time. Even for the same product, the algorithm may change over time — either due to regulation, changes in product definition, or even the improvement of a technical error. The same changes are not implemented in the ETL process.

Designing a structure for data and testing for quality

It’s hard to expect an improvement on data quality from ETL processes but setting the goal of preventing deterioration in data quality in this stage is a reasonably realistic goal.

A properly designed structure of the target database requires the same factors — correct understanding of input data (i.e., documentation and business knowledge). Often misconstrued as an unnecessary cost, documentation is a very good investment because:

-

Errors in the solution can be detected even during the documentation process itself, which greatly reduces potential costs.

-

Documentation significantly helps in the implementation of new people in the project and the transfer of knowledge.

-

It is essential for teams working on this data.

Another good practice in managing data and analytics projects is central data naming. The documentation of source data, target data, and data at all stages of processing is a key factor in ensuring data quality. However, there might be situations where each author — even if well-intentioned — formulates the names of the fields according to their own ideas. Even if the documentation appears to be well-prepared, will it really fulfill its role? It won’t. It’s very important to name the same concepts in the same way throughout the organization. This has a remarkable effect on avoiding misunderstandings between business units or between individual stages of data processing.

Of course, there is also the most obvious in quality assurance: testing. It entails multiple types of tests, such as testing for data completeness, correct data relationships, and rational data distribution schemes from a statistical point of view.

Here, I mentioned two stages: the source data and the target structure of data. In real-life projects, processing is multistage. There should be verification performed at each stage, whether it’s checking that data has not been lost between the stages or verifying that quality has not suffered. When using testing tools, they should easily and flexibly enable:

-

Connection of tested data sources.

-

Implementation of new and update of existing validation rules.

-

Connection and update of dictionaries of allowed values.

-

Definition of the format of the resulting report.

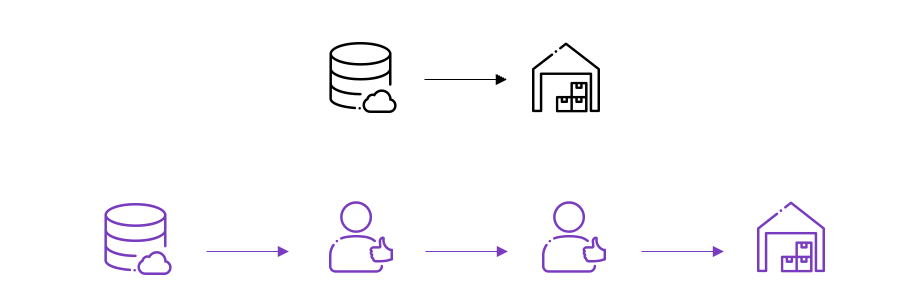

In large organizations, databases at individual stages of processing are managed by separate units. The rule for each data transfer should be in such a way that the sender verifies the correctness of the data before sending it. The recipient then verifies the correctness of the data before saving the data to the database.

A diagram visualizing the rule for each data transfer (on the left — verification before sending, on the right — verification before receiving)

An “acceptance criteria” should be established to clearly define the minimum data quality requirements that must be met. Otherwise, incoming data should not be entered into the database, but a discrepancy clarification process should take place earlier. It is good practice to define acceptance criteria at the earliest stages of the project, even within functional specification. Below is an example of a defined acceptance criteria:

| Dimension | Threshold |

| Completeness | 95% |

| Uniqueness | 100% |

| Validity |

98% |

Embedding quality to data management

Business decision-making will only be as accurate and forward-looking as the data it’s based on. Your teams can use the most state-of-the-art tools, but your efforts will only fall short on your targets without quality data and the expertise to thoroughly sift through vast amounts of data.

It’s not only important to have the technologies. It’s also about nurturing a data-driven culture that empowers the organization’s people to embed quality in the data that they access, use, and manage. This involves focusing on the meaningful and valuable data that sets a strong foundation for the organization's data and analytics projects, defining fit-for-purpose processes and using data assets, and embedding continuous improvement in the organization's culture. There are many factors that can influence quality in data, but a more fundamental factor is how to turn quality into a success factor in the organization’s journey to data transformation.

Lingaro Group works with Fortune 500 companies and global brands in adopting a multidimensional approach to standardize quality — from preparing, profiling, cleansing, and mapping to enriching data. Our experts work with decision-makers to advance their organization’s maturity in analytics and ensure that the data they use is accessible, unified, trusted, and secure. Lingaro also provides end-to-end data management solutions and consulting that cover the entire data life cycle and across different operating models, tiers, storages, and architectures.