The promise of generative AI is enticing: the ability to generate new content at a scale never before possible. Given the cost of implementation and the challenges of working within API limitations, however, is it worth the investment for your business? Looking at its enterprise-specific use cases can help gauge generative AI’s value to the business.

With the help of complex algorithms and machine learning techniques, generative AI can create brand new content, images, sounds, and even entire virtual worlds that have never existed before.

In fact, the use of AI has more than doubled in the past five years. Robotic process automation and computer vision, for instance, have been the most commonly deployed AI capabilities each year. The increased adoption of and investment in AI are a testament to its potential to transform industries.

Microsoft and Google already use generative AI to bolster revenue performance in their cloud businesses. But how is it different from deploying solutions or tools powered by “traditional” AI — and how can enterprises use it to their advantage?

Limitations of generative AI and OpenAI

While generative AI’s advantages seem all-encompassing, the technology is still evolving and has some limitations. For one, there’s the cost associated with fine-tuning and adapting the models to suit specific needs. This includes the cost of data transformation for prompts, as well as the cost of fine-tuning based on the company’s data. Training the models, too, is time-consuming.

In terms of API limitations, the official ChatGPT API for fine-tuning is not yet available as of this writing, and there may be limitations to accessing Azure OpenAI services.

There are growing pains, too. Releasing new versions of models may require changes to the API, and in some cases, model explainability, repeatability, and interpretability can be challenging. Before investing in generative AI, businesses should consider the expenses that could arise, such as the significant computing power and cloud resources needed to train models, along with service quotas such as those illustrated below.

| Limit Name | Limit Value |
| --- | --- |
| OpenAI resources per region | 2 |
| Requests per minute per model* | Davinci-models (002 and later): 120; ChatGPT model (preview): 300; GPT-4 models (preview): 12; All other models: 300 |
| Tokens per minute per model* | Davinci-models (002 and later): 40,000; ChatGPT model: 120,000; All other models: 120,000 |
| Max fine-tuned model deployments* | 2 |
| Ability to deploy same model to multiple deployments | Not allowed |
| Total number of training jobs per resource | 100 |
| Max simultaneous running training jobs per resource | 1 |
| Max training jobs queued | 20 |
| Max Files per resource | 50 |
| Total size of all files per resource | 1 GB |
| Max training job time (job will fail if exceeded) | 720 hours |
| Max training job size (tokens in training file) x (# of epochs) | 2 billion |

Azure OpenAI Service quotas and limits (subject to change)

Source: Microsoft
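One way to work within per-minute request limits like those in the table is to throttle calls on the client side. The following is a minimal sketch under stated assumptions, not a feature of any official client library: the `SlidingWindowLimiter` class, its method names, and the injectable `clock` parameter are all hypothetical, and the limit you pass in would come from whichever quota applies to your model.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Hypothetical client-side throttle: at most `max_requests` per window."""

    def __init__(self, max_requests, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock   # injectable so the limiter can be tested without sleeping
        self.sent = deque()  # timestamps of requests still inside the window

    def seconds_until_allowed(self):
        """Return 0.0 if a request may be sent now, else the wait time in seconds."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self.sent and now - self.sent[0] >= self.window_seconds:
            self.sent.popleft()
        if len(self.sent) < self.max_requests:
            return 0.0
        return self.window_seconds - (now - self.sent[0])

    def record(self):
        """Register that a request was just sent."""
        self.sent.append(self.clock())
```

Before each API call, the caller would wait for `seconds_until_allowed()` seconds and then call `record()`. With `max_requests=120`, this would keep a single client under the Davinci request quota listed above, although it does not account for the tokens-per-minute limit or for other clients sharing the same resource.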

To give you an idea of the costs: for every 1,000 tokens (about 750 words) of prompt requests and completion responses, the GPT-4 API costs US$0.03 and US$0.06 respectively, while the ChatGPT API costs US$0.002 for both. A version of the GPT-4 API with a larger context length of 32,768 tokens costs US$0.06 and US$0.12 per 1,000 tokens of prompt requests and completion responses. At the base rates, the GPT-4 API can be up to 29 times more expensive than the ChatGPT API (US$0.06 versus US$0.002 per 1,000 completion tokens, or 30 times the price).
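To make these per-token prices concrete, here is a small cost-estimation sketch. The prices are the ones quoted in this article and are subject to change; the dictionary keys (`gpt-4-8k`, `gpt-4-32k`, `chatgpt`) and the `estimate_cost` function are illustrative labels, not official model identifiers or API calls.

```python
# Prices in US$ per 1,000 tokens, as quoted in this article (subject to change).
# The keys are illustrative labels, not official model identifiers.
PRICE_PER_1K = {
    "gpt-4-8k": {"prompt": 0.03, "completion": 0.06},
    "gpt-4-32k": {"prompt": 0.06, "completion": 0.12},
    "chatgpt": {"prompt": 0.002, "completion": 0.002},
}


def estimate_cost(model, prompt_tokens, completion_tokens):
    """Estimate the US$ cost of one request from its token counts."""
    price = PRICE_PER_1K[model]
    return (prompt_tokens * price["prompt"]
            + completion_tokens * price["completion"]) / 1000


# Example workload: 1,000 prompt tokens and 1,000 completion tokens per request.
for name in PRICE_PER_1K:
    print(f"{name}: US${estimate_cost(name, 1000, 1000):.3f} per request")
```

For that example workload, a request costs roughly US$0.09 on the base GPT-4 rates versus US$0.004 on the ChatGPT rate. Multiplying by an expected monthly request volume gives a first-order budget figure, before any fine-tuning or hosting costs.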

Investing in generative AI entails carefully considering the use cases, costs, and potential benefits, not to mention ethical concerns, such as biased data, that could arise from using this technology. If the benefits to your business far outweigh its limitations, here's an overview of how you can implement it.


Figure 1. The standard process for productizing a generative AI-based solution

Integrating generative AI in businesses

As visualized above, here’s an outline of steps on how businesses can successfully implement generative AI:

- Identify specific business needs and use cases. Pinpoint the business problems that generative AI could address, including the outcomes you’re expecting and how you’ll measure them. Assess your business’s readiness for implementing generative AI by considering your available resources, budget, and technical expertise.

- Choose the right type of generative AI. This depends on your use case, the type and quality of data that your organization has, and the resources you need to train and deploy the model.

- Collect and preprocess data. Generative AI models require large amounts of high-quality training data. Ensure that the data is relevant, diverse, and representative of the real-world scenarios the model will encounter. Preprocessing steps include cleaning, normalization, augmentation, and other techniques to improve data quality.

- Fine-tune the model. This can be a time-consuming and resource-intensive process that requires powerful hardware and expertise in deep learning. You may need to experiment with different model architectures, hyperparameters, and training algorithms to optimize the model’s performance. In many cases, fine-tuning may not be necessary, but properly integrating resources is still required.

- Integrate the model into business processes and data. This can involve deploying the model to a cloud-based service, building custom software to interface with the model, or integrating company documents and knowledge databases. You’ll also need to establish processes for data integration as well as data input, model output, and error handling.

- Monitor and adjust the model over time. Generative AI models can be prone to errors and biases, and their performance can degrade over time as real-world data shifts and grows. Monitor the model’s performance regularly and adjust it as needed. This can involve retraining the model with new data, fine-tuning the model’s parameters, or implementing new error handling and monitoring processes. As the organization’s data grows, you’ll need to scale the model to accommodate it.
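As an illustration of the "collect and preprocess data" step, the sketch below cleans raw text records and removes duplicates before they are used for fine-tuning. It assumes a hypothetical layout in which each record is a dictionary with `prompt` and `completion` fields and the output is written as JSON Lines; the field names and helper functions are assumptions for this example, and the format a given service expects may differ.

```python
import json
import re


def clean_text(text):
    """Collapse runs of whitespace and strip leading/trailing spaces."""
    return re.sub(r"\s+", " ", text).strip()


def prepare_examples(records):
    """Clean records, drop incomplete ones, and remove case-insensitive duplicates."""
    seen = set()
    examples = []
    for record in records:
        prompt = clean_text(record.get("prompt", ""))
        completion = clean_text(record.get("completion", ""))
        if not prompt or not completion:
            continue  # skip incomplete examples
        key = (prompt.lower(), completion.lower())
        if key in seen:
            continue  # skip duplicates that differ only by case or spacing
        seen.add(key)
        examples.append({"prompt": prompt, "completion": completion})
    return examples


def to_jsonl(examples):
    """Serialize examples as JSON Lines, one example per line."""
    return "\n".join(json.dumps(example, ensure_ascii=False) for example in examples)
```

Real pipelines usually add further steps, such as removing personal data and balancing topics, but even this much cleaning avoids spending training tokens on empty or repeated examples.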

Prompts and prompt engineering

Typical AI-based solutions rely on rules that humans define to teach machines how to make decisions, while generative AI creates new data based on patterns found in existing data. When deploying a model in traditional AI, the system is trained to follow predefined rules. In generative AI, models are used to generate new content, so their deployment process is different. Both follow a similar productization process, but generative AI’s differs in its reliance on prompts and prompt engineering.

In generative AI, prompts are like the building blocks that models use to create new content. To get the best results, prompts need to be carefully designed to meet high standards for quality, precision, and relevance. If a prompt is poorly constructed, the resulting content may be inaccurate or unhelpful. This is why prompt engineering is important for achieving high-performance natural language processing (NLP) applications that rely on the “prompt-response” model.

Prompt engineering is critical for developing top-notch language models that can perform a wide range of NLP tasks with great accuracy and efficiency. To engineer good prompts, several steps are involved, including:

- Defining the task or goal.

- Choosing the prompt format.

- Designing the prompt text.

- Testing and refining the prompt.

- Repeating the process until a high-quality prompt is developed.
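The test-and-refine loop in the steps above can be sketched in a few lines. This is only an illustration under stated assumptions: `generate` is a hypothetical callable standing in for whatever model you call, and the scoring rule (required terms present, response within a word limit) is a deliberately simple stand-in for a real evaluation.

```python
def build_prompt(template, **fields):
    """Fill a prompt template with task-specific fields."""
    return template.format(**fields)


def score_response(response, required_terms, max_words):
    """Fraction of required terms present; 0.0 if the response is too long."""
    if len(response.split()) > max_words:
        return 0.0
    text = response.lower()
    hits = sum(1 for term in required_terms if term.lower() in text)
    return hits / len(required_terms)


def pick_best_template(templates, fields, generate, required_terms, max_words=50):
    """Try each candidate template and return the best one with its score.

    `generate` is a hypothetical function that sends a prompt to a model
    and returns the response text.
    """
    scored = []
    for template in templates:
        response = generate(build_prompt(template, **fields))
        scored.append((score_response(response, required_terms, max_words), template))
    best_score, best_template = max(scored, key=lambda pair: pair[0])
    return best_template, best_score
```

In practice, each round of this loop would feed into the next: the best-scoring template is kept, new variants are written, and the comparison is repeated over a larger set of test questions.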

Prompt engineering can involve tweaking the wording and structure of the prompt to encourage the model to generate content that meets certain criteria, such as a certain level of creativity, or a specific style or tone. For example, ChatGPT allows its subscribers to fine-tune their prompts more precisely.


Figure 2. Sample visualization of how a chatbot integrates generative AI

Source: Microsoft

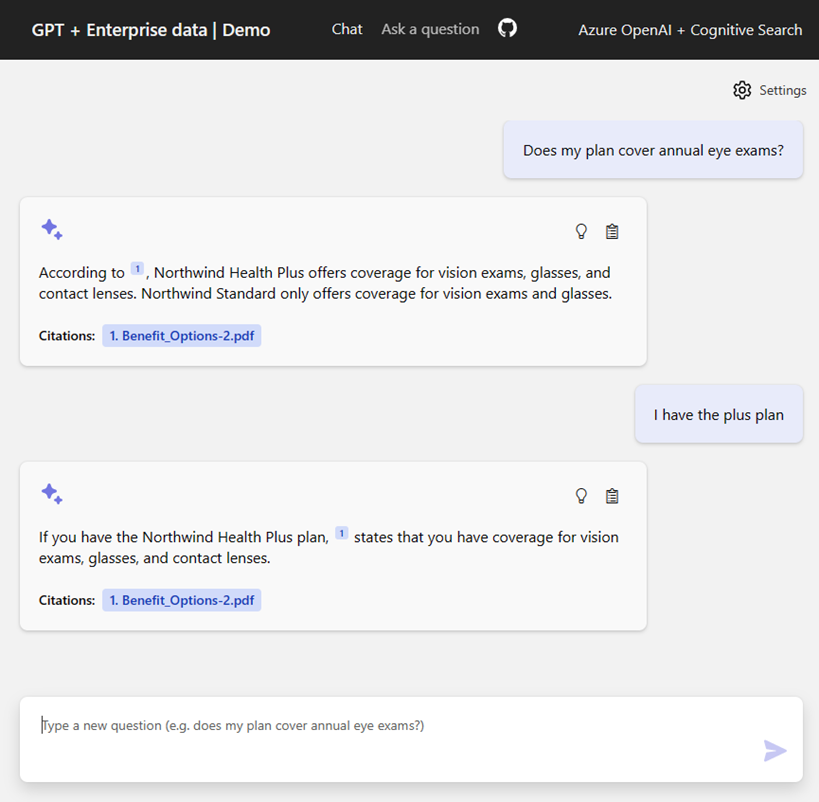

At Lingaro, for instance, we’ve had projects that integrated company data with GPT/ChatGPT models using Azure services such as Cognitive Search, which greatly enhances the model’s ability to generate relevant and accurate responses. As illustrated in Figure 2 (Microsoft’s example, which we’ve applied), company documents, policies, and knowledge bases can be connected to the ChatGPT layer, which, in turn, can help the model generate more precise and tailored responses.
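The general pattern of connecting company documents to a model can be illustrated with a toy example. The sketch below does not use Azure Cognitive Search or any real search API: `retrieve` is a simple word-overlap ranking that stands in for a search service, and the document structure (`id` and `text` fields) and prompt wording are assumptions made for illustration.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by word overlap with the question (toy stand-in for a search service)."""
    query = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(query & tokenize(doc["text"])),
        reverse=True,
    )
    # Keep only documents that share at least one word with the question.
    return [doc for doc in ranked[:top_k] if query & tokenize(doc["text"])]


def build_grounded_prompt(question, documents):
    """Assemble a prompt that asks the model to answer only from retrieved sources."""
    sources = retrieve(question, documents)
    context = "\n".join(f"[{doc['id']}] {doc['text']}" for doc in sources)
    return (
        "Answer the question using only the sources below. "
        "If the answer is not in the sources, say you do not know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

The resulting prompt would then be sent to the model. Labeling each source with its identifier makes it possible to ask the model to cite which document an answer came from.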

Figure 3. An example of how prompts can be used in a chatbot application

Source: Microsoft

Another example is a chatbot application. Prompts can be used to guide the conversation in a particular direction. The prompts could be based on specific questions or topics, or they could be designed to elicit a particular emotional response from the user. The chatbot could then use this information to generate responses that are tailored to the user's needs.

In this case, the chatbot employs prompts to steer the conversation toward fulfilling the user’s requirements. When it comes to enterprise-level applications, configuring these tailored prompts at a larger scale would necessitate proficiency in code development.
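As a rough illustration of configuring such prompts in code, the sketch below builds a conversation history whose first message steers topic and tone. It assumes the role-based message layout (`system`, `user`, `assistant`) used by chat-style model APIs; the function names and the prompt wording are hypothetical, and no API call is made.

```python
def start_conversation(topic, tone):
    """Create a message history whose system prompt steers topic and tone."""
    system_prompt = (
        f"You are a support assistant. Only discuss {topic}. "
        f"Keep a {tone} tone and ask one clarifying question when the request is unclear."
    )
    return [{"role": "system", "content": system_prompt}]


def add_turn(messages, role, content):
    """Return a new history with one more turn; the original list is left unchanged."""
    if role not in ("user", "assistant"):
        raise ValueError(f"unsupported role: {role}")
    return messages + [{"role": role, "content": content}]
```

For example, `add_turn(start_conversation("order tracking", "friendly"), "user", "Where is my parcel?")` yields a two-message history that could be passed to a chat model. Generating the system prompt from parameters is what lets the same code serve many departments or products with different topics and tones.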

Implementing generative AI depending on use case

Generative AI boasts versatility, but its adoption in the enterprise should depend on your use case. For example, AI tools such as Stable Diffusion, Adobe Firefly, and DALL-E 2 can be advantageous for product designers and developers. Using one over the other depends on the use case or how it will be applied to the project.

DALL-E 2 has impressive capabilities, generating high-quality images with four times greater resolution than its predecessor. It can combine various concepts, attributes, and styles to create unique and innovative designs.

If you are a designer working on a budget, Stable Diffusion is a free and open-source tool to try out. It allows you to create textures, images, and animations based on either text descriptions or existing renders. It is also conveniently available as a plug-in for Blender and Unreal Engine, both of which are popular 3D creation tools.

On the other hand, Adobe Firefly is still in beta, but it offers a wide range of content types, including audio and video. Adobe has partnered with organizations and launched initiatives that create a standard for ensuring the authenticity of generated art and content. Firefly automatically attaches tags in embedded “Do Not Train” Content Credentials, which make it easy to distinguish works generated by AI.

Beyond marketing and advertising, generative AI can also help you streamline your operations. For example, tools such as GitHub Copilot can generate computer code from data or natural language descriptions, which can be used to automate software creation and maintenance tasks.

At Lingaro Group, we harness the potential of generative AI while still being mindful of its risks. This synergy means adopting an end-to-end approach. We help develop a long-term strategy that addresses the business's unique needs before preparing and transforming data as well as developing models and tools for deployment. We also employ guardrails when operationalizing them across the enterprise to ensure proper governance.
