Generative AI derives its moniker from its capability to create new outputs such as images, texts, and audios based on the data they’ve been trained on. The recent breakthroughs are astonishing — from art, videos, programming, writing, gaming, and even biology and chemistry to astronomy — and are sparking passionate conversations and revolutions in almost every part of the business and people’s daily lives. How did we get here — and where are we going next?

AI has moved past the hype stage and is now part of our everyday lives. If you want proof of this, just ask your favorite voice assistant. As we walk closer to the horizon of technology, we come to find that there’s still plenty of things to discover ahead. Now, generative AI has come into view.

As of this writing, the tech giants are jockeying for pole position in this new AI race. Microsoft is backing OpenAI’s ChatGPT, an AI chatbot that uses a dialogue format to interact with a human user in an informal or conversational way. Google is playing catch-up with its own AI chatbot, Bard, but has unfortunately stumbled along the way. And naturally, smaller players are working to establish their niches:

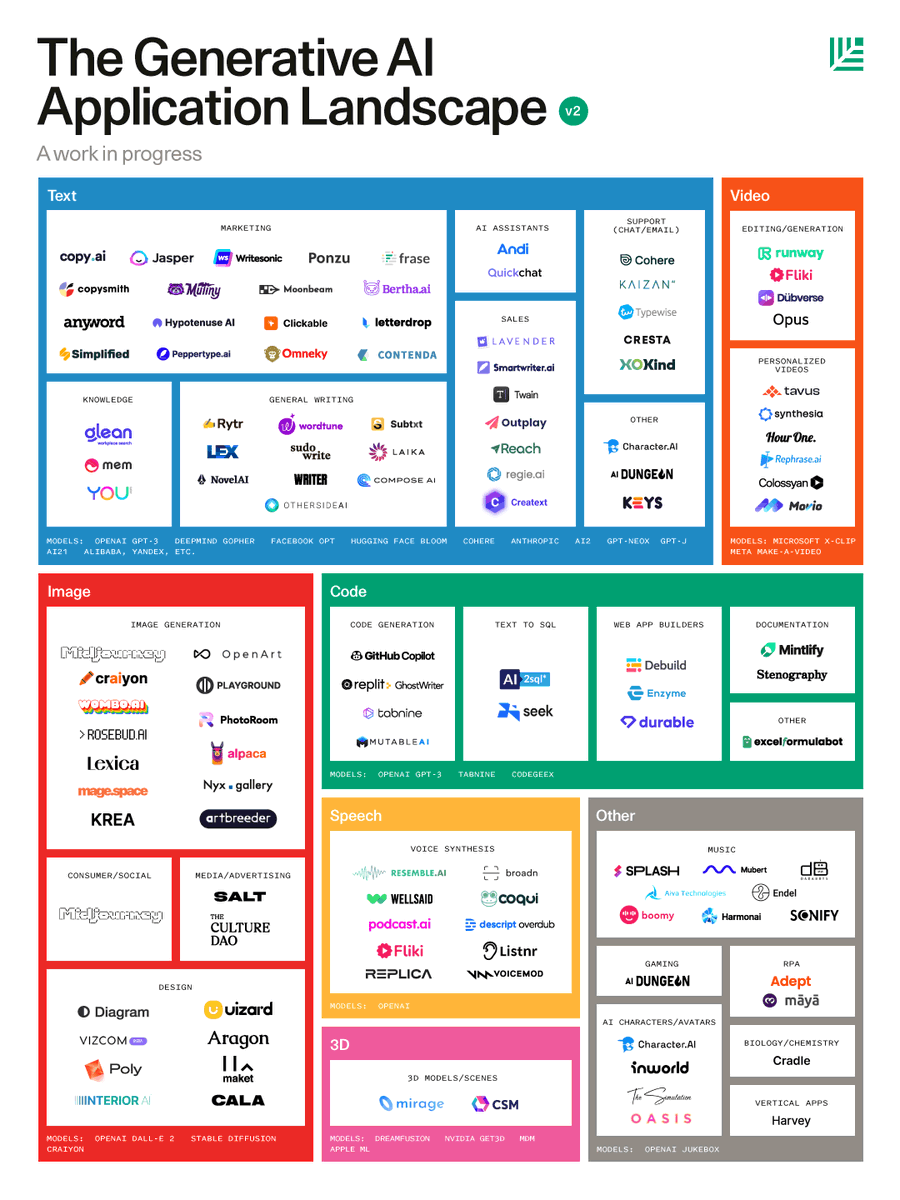

Figure 1. Products and services that use Generative AI

Figure 1. Products and services that use Generative AI

Image credit: Sonya Huang, Sequoia Capital

With so much happening in the field right now, it can be difficult to discern if generative AI is right for your business, much less know how to implement it properly.

How generative AI works

Unlike early AI that can only categorize and perceive things, generative AI can create new data instances that resemble the things it has categorized and perceived. That is, this new type of AI can create text, images, sounds, and videos that are based on real-world models. To illustrate, not only can generative AI recognize an elephant, but it can create a realistic or stylized image of one, too.

Let’s dive a bit deeper into this.

To understand how generative AI works, we must first have a grasp of concepts that underpin it. We’ll look at how machine learning models learn and how these models apply what they’ve learned to produce the desired outputs.

Supervised learning and discriminative modeling

The first machine learning models were taught by humans to classify data points into categories or labels. To illustrate, if we give a machine learning model images with enough visual information to convey the characteristics and features of a bicycle — and the images are labeled as “bicycles” — then that supervised model will be able to recognize bicycles from the new pictures, video clips, and video feeds we’ll give it.

.png?width=1200&height=614&name=Gen%20AI%20diagram%201%20(1).png)

If supervised learning is how a machine learning model is trained, then discriminative modeling is how the model applies what it has learned to discern the category or label a particular input belongs to.

Discriminative models can also be described as predictive. One way we can think about what “predictive” means is by visualizing a highly pixelated picture that is gradually becoming clearer. Sooner or later, we come to a point where we can guess with sufficient confidence (i.e., predict) what the picture would depict at high resolution. Let’s use a concrete example to illustrate this idea. The label “bicycle” has a distinguishing set of characteristics and features, so the more an image of an object has those characteristics and features, the more accurate the prediction becomes (that the object is a bicycle).

In addition to being able to discern the category or label of something, discriminative models can make fine distinctions between two or more items. For instance, the label “car” has its own set of characteristics and features, so a discriminative model can distinguish a car from a bicycle.

Discriminative modeling is iterative, meaning to say that the machine learning model’s predicted output is compared to the expected output. When the prediction is off by a certain degree, the model is retrained to increase the accuracy of its predictions. Here, human verification of outputs is important. We don’t want another case of an AI tool calling human beings “gorillas” or “primates.”

Unsupervised learning and generative modeling

In unsupervised or self-supervised learning, the machine learning model takes unlabeled datasets and figures out patterns and inherent structures within them — without human intervention. Unsupervised machine learning models are used to accomplish three tasks:

-

Cluster similar data points into groups. The machine learning model determines which category or group an item belongs to, based on similarities and differences found in training datasets. For example, an AI tool can take sales data of beauty products and create market segmentation based on the customers’ age, gender, and race.

-

Associate data points with one another. Given rules to find relationships between data points in a dataset, the learning model can specify what items can go with, affect, or be affected by other items. To illustrate, an AI tool can look at the items in an online shopper’s cart and generate product recommendations based on what other customers have bought together with those items.

-

Reduce dimensionality. When the number of dimensions or features in a dataset is unwieldy, the AI tool identifies which data inputs are integral so that it can slough off the rest. For instance, image and video autoencoders can take out film grain to enhance the quality of visuals.

Once trained, the model can become generative. That is, the model can use the patterns and structures it has found in a training dataset to create new data instances that are like what’s in that dataset. To illustrate, by having a generative model read tens of thousands of webpages, it can write how a sentence will end upon receiving a few words to start with. And if you provide a model with many pictures of bikes, it can learn what bikes look like in general and generate an image of a bike that’s not included in the pictures you provided.

.png?width=1200&height=607&name=Gen%20AI%20diagram%202%20(1).png)

Do note that although we describe the learning model as unsupervised, data analysts still need to validate the outputs it generates. For example, analysts will still need to see if a recommendation engine is correct to pitch cutlery items to shoppers who’ve put dinner plates in their baskets.

Types of generative models

Generative machine learning models have two different ways of creating novel data instances. The first is via generative adversarial networks (GANs), and the second is via generative pretrained transformers (GPTs).

Generative adversarial networks

GANs are a class of machine learning models that can create new sound recordings, videos, images, and texts. A GAN comprises two competing algorithms. The first is a generative algorithm that creates new and plausible data instances (such as images of bicycles based on a dataset full of pictures of bikes). The second algorithm, called a discriminator, checks the output against real data from any network architecture containing appropriate (i.e., comparable) data.

When training for the GAN commences, the generator often begins by submitting output that obviously looks fake. If the GAN is being trained to produce novel images of bikes, its first submission might barely resemble a bike. Thus, when the discriminator checks the output against images of real bikes, it tells the generator that it has failed and must try again. The process repeats, and, over time, the generator creates output that can trick the discriminator, so much so that the latter mistakenly classifies fake data as real more frequently. To illustrate, GANs can create images of people who don’t exist, yet discriminators and humans alike will say that they are images of real people.

Generative pretrained transformers

GPTs form a class of machine learning frameworks that don’t rely on the adversarial generator-discriminator relationship of GAN. Rather, a GPT depends on being trained on a particular task beforehand so that it is ready to perform a similar task in the future. To illustrate, if we train a framework to classify bikes and cars, we can save what it has learned so that it can detect bikes and cars (a similar but different task to classifying) later on. When that time comes, we won’t have to teach it how to recognize those things anymore.

GPT also utilizes a transformer, a neural network that can learn context — i.e., relationships in sequential data, such as the meanings assigned to words based on their short- and long-distance relationships with other words and punctuation in a sentence. To illustrate, GPT will know the difference between “John eats ham, BLT, and peanut butter and jelly sandwiches” and “John eats ham, BLT, peanut butter, and jelly sandwiches.”

In addition to text data, GPT can be used on other types of data, such as image and video data, for as long as the data is sequential, to generate all sorts of things. For instance, OpenAI created two GPT-based applications, namely ChatGPT and DALL-E-2. ChatGPT is a chatbot that can hold a chat conversation with a human user in an informal or conversational tone, while DALL-E can create images based on text descriptions.

Generative AI at Lingaro

We see the availability of large language models (LLMs) like GPT to produce good and reasonable semantic artifacts at a mass scale. Lingaro’s Intelligent Insights solution, for instance, provides content (e.g., summaries, guides, reports, comments) that can be personalized and presented in the right business context for increased comprehension and high adoption.

Using generative AI to craft a message out of raw insights, customizing it to the users’ contexts, and then delivering it to their points of consumption will allow our customers to spend more time on perfecting their business operations. The important factor is understanding the underlying architecture of the models. We see the importance of intelligent prompting from a security and governance standpoint, but we also recognize the potential of using the capabilities of LLMs to their full extent. While working on Intelligent Insights and using the transformative capabilities of generative AI, Lingaro focused on flexibility for the users to combine stories in forms that are both relevant (based on machine-learned understanding of user affinity) and potentially emotionally resonant for the person to whom the story is delivered.

We don’t see GPT-3 as a replacement of human intelligence, but rather a new way of interacting with the insights that are produced with our data services. It’s important to create a safe environment for the user. The primary application of models like GPT-3 will help us with automating small tasks like reconstructing missing parts of texts or completing them.

Hat tip to Jan Strzelecki for his inputs in this article