Generative AI has captured the fascination and imagination of artists, scientists, engineers, businesspeople, and everyday end users alike. Its ability to unlock new levels of innovation across the business, however, comes with equally remarkable pitfalls. Businesses should be cautiously optimistic: They first need to identify the parts of their business where generative AI could add value, create opportunities, and have the most impact. Before any of this can happen, businesses must get past the many stumbling blocks ahead.

In the TV series “Billions,” there was a scene in which a robotics manufacturer showcased its humanoid robot’s walking (and door-punching) capabilities to captivate potential investors. A woman then laid pens on the floor and challenged the engineers to have the robot walk across the obstacle-strewn surface without stumbling. The engineers backed off, afraid that their expensive prototype might fall and break. The investors lost interest, for obvious reasons.

The scene illustrates how new technology can be fascinating and risky at the same time. It’s not hard to imagine the exhilaration the Wright brothers felt as they tried to fly, along with the adrenaline rush of fearing they might crash. When it comes to generative AI, the latest innovation to emerge from the field of artificial intelligence, it’s easy to get excited about what it promises to do for businesses.

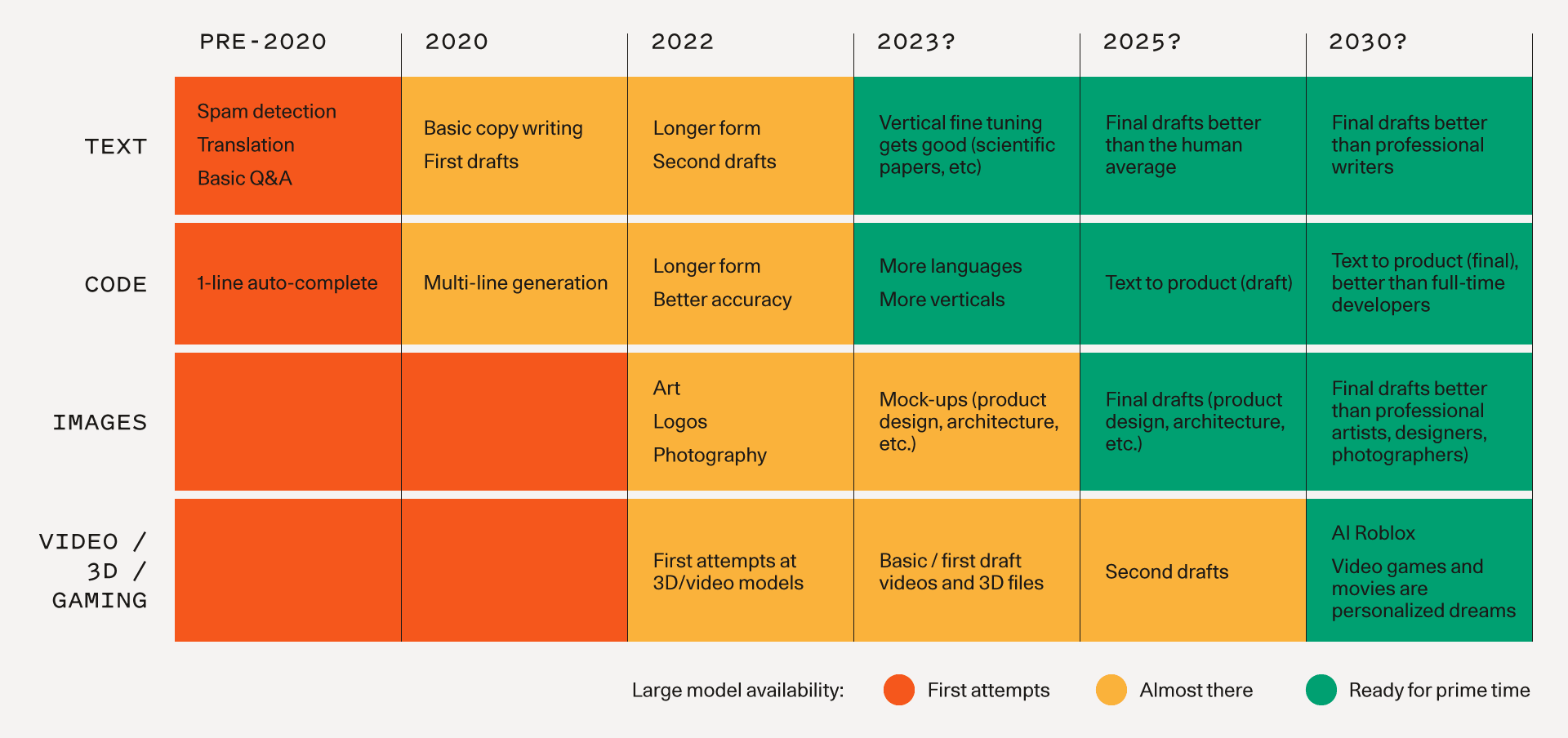

A sample trajectory of generative AI’s evolution over time

Image credit: Sequoia Capital

In our previous post, we looked at what individual and business users can do with generative AI, focusing on how AI can create content for knowledge management systems. AI is currently being developed to take on tasks like producing reports, which frees knowledge workers to focus on more urgent or higher-value work.

However, as shown in the earlier table, creatives are projected to be replaced by intelligent automatons by 2030. Removing their salaries and benefits from the expense column would surely fatten businesses’ margins. And if AI eventually becomes better than humans at everything we do, then perhaps we’ll reach a point where machines do everything for us. They’ll grow our food, manufacture our goods, and treat our diseases. Humans would no longer need to work, and might no longer need money, either. We’d likely gain a lot of weight, just like the people in the movie “Wall-E.”

That outcome sounds fascinating, but it is probably still far off. Before the general population buys into the generative AI hype, enterprises must first adopt the technology and help establish its value (as they did with facial recognition and natural language processing). And before business owners and managers can do that, they must first work out how to get past the stumbling blocks along the way.

Generative AI is not foolproof

A salad can only be as fresh as its ingredients. Likewise, the quality of an AI’s output depends on the quality of the datasets used to train it. For example, OpenAI's ChatGPT, a chatbot that responds to users in a conversational tone, uses a model in the GPT-3.5 series. That model finished training in early 2022, so it may lack information about anything that has happened since. As a result, ChatGPT may give users inaccurate or outdated information on topics that weren’t covered during training or that have changed since it was last trained.

Naturally, if the training datasets are full of gaps and inaccuracies to begin with, the AI’s output will be highly inaccurate as well. Case in point: In February 2023, ChatGPT failed math and science tests given to Singaporean sixth graders (though it gave correct answers when asked the same questions days later). Users must put verification measures in place rather than blindly accept whatever the AI provides.

Output quality might not meet expectations

When a user directs a generative AI tool to create outputs like blog posts and images, the AI might produce something far from what the user had in mind. To illustrate, DALL-E 2 can create images based on user-submitted text descriptions. While impressive, it has trouble combining multiple properties of an object, such as its shape, position, and color. It can’t count beyond four, and it misunderstands words with multiple meanings. It also can’t handle complex prompts such as “a realistic image of a girl made out of Lego who is missing her right arm and is rebuilding it with her left hand.”

It also has no way of accounting for the user’s personal taste. When we prompted it to create an image of “binary code forming a face of a humanoid robot,” one of the images it produced did indeed fit the description, but a user who isn’t into dystopian sci-fi would likely reject it:

An output created by DALL-E 2 with the input, “binary code forming a face of a humanoid robot”

In other words, subjective factors like a user’s tastes and preferences will cause an AI to miss the mark until it learns enough about what that user wants.

AI is vulnerable to biases

Anything a human being is involved with will also be shaped by that person’s experiences, viewpoints, beliefs, and biases. To illustrate, natural language processing systems are typically built first for their programmers’ mother tongue. In the same way, text generators are first trained to write in the language their programmers know. Dominance in technological advancement can carry cultural dominance with it as well. For example, navigation apps often mispronounce street names in Asian cities because they only know how to speak with an American accent.

Facial recognition software offers another example. Such software tends to recognize Caucasian faces best when it’s built by white engineers and trained mostly on white faces, and it recognizes Asian faces more accurately when it’s built by Asian companies. Facial recognition software used by law enforcement in the US, for instance, became mired in controversy when it was flagged as racially biased. We can expect that what a generative AI tool produces will likewise be significantly affected by the biases of those who programmed it.

To counter algorithmic biases, which can spread easily and quickly without proper governance, code must be built by a diverse set of individuals who can check one another’s blind spots. These teams should include fairness as a dimension of the systems they build and make creating equality a goal alongside generating wealth.

Governance issues abound

Generative AI has transformative capabilities, but it can also have a profound social impact. What is the right way of using generative AI, and how can we make sure that it is indeed used the right way?

The line between truth and falsehood is blurred

Turning the textual and video accounts of Holocaust survivors into historical image renderings and interview-style AI-generated holograms helps future generations better connect with their stories. Deepfakes, however, can also convincingly misinform people; an AI-generated Barack Obama, for example, can be made to say things the real Barack Obama never said.

While there are currently no universal laws or regulations on deepfakes, some US states have enacted laws, and Europe has proposed regulations, to address their potential negative consequences.

In the US, California and Virginia have laws that criminalize the creation and distribution of deepfakes with malicious intent. In Europe, the European Commission has proposed regulations that would make it illegal to create and distribute deepfakes intended to harm individuals or undermine democratic processes. And while regulations such as the Digital Services Act are meant to police tech giants like Amazon, Google, Meta, Twitter, and TikTok, they do not cover other data repositories, such as websites that host explicit deepfake content.

Private data ceases to be private

There’s also the question of data privacy. If, for instance, a user enters personal and private data into an AI app, that data will likely be incorporated into the app’s repository so that the app can learn from it. How can we trust the app to keep that data to itself? For now, we can’t.

To illustrate, imagine using a generative AI writing tool like ChatGPT to improve documents containing confidential data such as medical records, proprietary formulas, or yet-to-be-published creative works. Since the tool is designed to learn continuously, it may retain your inputs and the outputs it provided and use them when generating responses for other users. In short, the private data you submit to generative AI tools ceases to be private.

Even if you avoid using such AI writing tools, they may still hold sensitive information like your phone number, current address, and other personal data that you might’ve posted on the web, as a renowned AI researcher has explained.

This is because these new AIs use large language models (LLMs) trained on data gathered from vast swaths of the internet. Indeed, some researchers have discovered that ChatGPT and other AI-powered chatbots already know their personal details.

Ownership issues pose challenges

When a person tells an image generator to create an image, will that image belong to that person, or the makers of the image generator? And if that person told the AI to create an image in the style of a particular living artist, will that artist have any claim to the image produced by AI?

Image generators are trained on images that are readily available on the internet. Some generators can create artworks in the style of a particular artist, and these can be nearly indistinguishable from the artist’s actual paintings, as when experts used AI to bring to life a new Rembrandt painting. Modern, living artists may lose commissions if AI-generated art is chosen over their actual work. AI-generated art may also draw away patrons who want pieces done quickly and inexpensively.

Many artists and illustrators are already calling out AI-powered art generators for using their artworks without consent, while visual media companies are sounding the alarm over copyright issues and challenges involving AI-generated content. On the other side of the fence, companies that make generative AI tools, such as Google, are more open to “rewarding high-quality content, however it is produced.”

How valuable or detrimental a tool is depends on how we use it. Rob Reich, a professor of political science at Stanford University, suggests that tech products ought to undergo a social assurance process so that tech companies and society at large can check whether new products will have an adverse impact on society. Will we reach a point where we have to enact laws regulating the use of generative AI? Maybe we can ask ChatGPT and its peers for their thoughts on the matter.

Generative AI at Lingaro

We don’t see generative AI and large language models (LLMs) like GPT-3 as replacements for human intelligence, but rather as new ways of interacting with the insights produced by our data services. In fact, we use GPT-3 to power our Intelligent Insights solution, a tool that crafts content (e.g., summaries, guides, reports, comments) out of raw insights. Lingaro Group’s Intelligent Insights can personalize content and present it in the right business context for greater comprehension and emotional resonance, and it can deliver that content wherever its recipients consume it. When our customers use Intelligent Insights this way, they can spend more time on more creative, complex, and valuable endeavors.

As businesses seek to use big data for growth and innovation, tools fueled by powerful LLMs have become increasingly important. However, relying solely on preexisting algorithms and models can lead to inflexibility and governance concerns. We factored in both issues while building Intelligent Insights. A composable design architecture provides a flexible, adaptable framework for integrating Intelligent Insights into existing data services, and we layered in security protocols and ethics frameworks to help ensure the tool is used safely and properly. Ultimately, by using Intelligent Insights and other generative AI-powered tools, businesses can stay ahead of the curve and unlock new possibilities for success.
