Businesses face a pressing need to efficiently generate new outputs and outcomes, particularly when using generative AI. An end-to-end approach provides a holistic way to use its potential — from strategy, development, and deployment to maintenance — and minimizes the need to integrate multiple services that might be too disparate to seamlessly work together.

Generative AI enables end users to create and design new data, images, and even entire products that never existed before. Organizations trying to tap into its potential, however, might find it challenging to cut through the hype, what with its current limitations, the costs involved, the systems and infrastructures needed to implement it, and the technical expertise required to properly use it, to name a few.

We’ve been working with enterprises and global brands to implement generative AI in their organizations. To address their needs, we developed an end-to-end approach that combines immediate and long-term strategies for addressing multiple specific use cases, such as product design, image and text generation, fraud detection, and more.

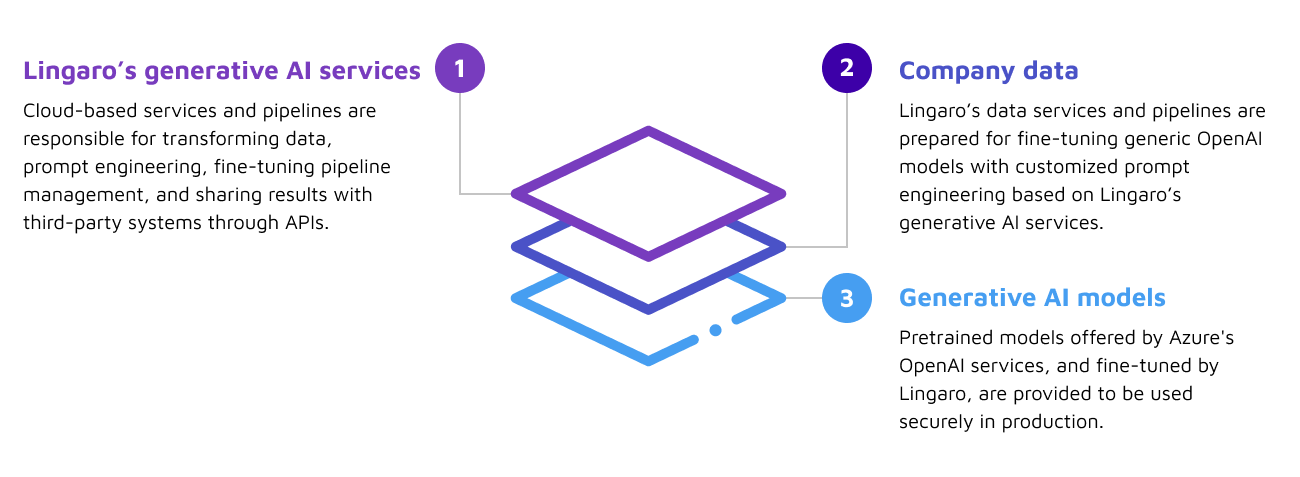

Figure 1. A visualization of the main components of Lingaro's end-to-end approach to generative AI

Lingaro’s end-to-end approach to generative AI

Lingaro's framework consists of three main components:

Lingaro generative AI services: These include cloud-based services and pipelines that handle data transformation, prompt engineering, pipeline management, and result sharing (via APIs). Essentially, these services enable Lingaro to take raw data from clients and transform it into a format that can be used by generative AI models.

Integrating AI models into existing systems entails API management, particularly for integrating the likes of ChatGPT or GPT-4. Prompt engineering is also crucial, as the outputs of generative AI models depend on how the prompts (i.e., instructions or tasks) are defined, refined, and optimized for accuracy and relevance.

Company data: Prompt engineering needs data on which to base the output, so the company's data has to be integrated. For instance, a financial services company could use transaction data to train an AI model to generate personalized investment recommendations for customers, while a media company could use content data to generate new articles or video scripts. By using their own data to train generative AI models, companies can generate more accurate and relevant content, improve customer satisfaction, and increase efficiency.

Another example is using data gathered from specific business functions to offer AI tools fine-tuned for distinct applications, and to keep underlying algorithms free of extraneous data scraped online from unknown sources. Several firms such as Adobe, Intuit, and Workday are deploying generative AI language models in areas such as financial management, talent management, and recruiting, as well as in other core software products and services.

Generative AI models: The Azure OpenAI Service has pretrained AI models that would need to be adapted to specific use cases, hyperparameters, or other specifications. This can be especially challenging for enterprise-level apps or solutions where the AI models are configured at a larger scale.

There are also other generative AI model providers, with the likes of Google, Meta, and Amazon racing to launch their own. There’s also StyleGAN2, a style-based generative adversarial network (GAN) that creates high-quality images of various objects, including faces and animals. Tacotron 2 is another model that can generate speech with natural-sounding prosody, pitch, and intonation, even for long and complex sentences, producing high-fidelity audio samples. Additionally, ERNIE is a set of pretrained language models that can be used for various natural language processing (NLP) tasks such as text classification, named entity recognition, and sentiment analysis.

Each provider has its own strengths and limitations, and the models they offer are optimized for specific use cases. For example, StyleGAN2 is proficient at generating realistic images of faces, while ERNIE is more suitable for NLP tasks. It’s crucial to carefully consider these distinctions while choosing a model for your specific requirements.

Figure 2. An overview of how Lingaro delivers generative AI-based solutions

Figure 3. A sample workflow of how Lingaro implements generative AI

Data transformation, prompt engineering, and fine-tuning models

Figures 2 and 3 show examples of how we work on generative AI projects. Data transformation and validation are important aspects here. Despite their obvious importance, they are often overlooked in analytics projects, and neglecting them can lead to inaccurate and irrelevant outputs.

Figure 4. A sample process of how data is transformed into output using generative AI

Data transformation involves converting raw data into a format that is structured and easy to analyze by generative AI models. It involves several steps, including cleaning, normalizing, and formatting the data. The goal is to ensure that the data is accurate, reliable, and relevant for training or fine-tuning.
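
To make the cleaning, normalizing, and formatting steps more concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a depiction of any production pipeline: the field names (`name`, `price`, `category`) and the sample records are hypothetical, and a real project would follow the client's actual schema.

```python
import json

# Hypothetical raw records; field names and values are illustrative only.
RAW_RECORDS = [
    {"name": "  Trail Running Shoe ", "price": "89.90", "category": "FOOTWEAR"},
    {"name": "Rain Jacket", "price": "120", "category": " outerwear"},
    {"name": "", "price": "15.00", "category": "accessories"},  # unusable: no name
]


def clean(record):
    """Cleaning: strip stray whitespace from every string field."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}


def normalize(record):
    """Normalizing: bring values to a consistent type and casing."""
    return {
        "name": record["name"],
        "price": round(float(record["price"]), 2),
        "category": record["category"].lower(),
    }


def to_model_input(record):
    """Formatting: emit one compact JSON line that a prompt can embed."""
    return json.dumps(record, sort_keys=True)


def transform(records):
    cleaned = (clean(r) for r in records)
    usable = (r for r in cleaned if r["name"])  # drop records without a name
    return [to_model_input(normalize(r)) for r in usable]


formatted = transform(RAW_RECORDS)
for line in formatted:
    print(line)
```

Keeping each step in its own small function makes it easier to test the steps separately and to swap one out when a client's data needs different handling.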

Data validation, on the other hand, involves verifying the quality and accuracy of the data to ensure that it is suitable for use. This process includes checking for missing values, ensuring that the data is in the correct format, and validating that it aligns with the intended use case.
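
The three checks mentioned above (missing values, correct format, and alignment with the use case) could be sketched as follows. The required fields and the allowed categories are assumptions made for this example; they stand in for whatever rules a given project defines.

```python
REQUIRED_FIELDS = ("name", "price", "category")                # hypothetical schema
ALLOWED_CATEGORIES = {"footwear", "outerwear", "accessories"}  # hypothetical use case


def validate(record):
    """Return a list of human-readable problems; an empty list means valid."""
    problems = []

    # 1. Missing values
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing value: {field}")

    # 2. Correct format: price must be a positive number
    price = record.get("price")
    if price not in (None, "") and not isinstance(price, (int, float)):
        problems.append("price is not numeric")
    elif isinstance(price, (int, float)) and price <= 0:
        problems.append("price must be positive")

    # 3. Alignment with the intended use case
    category = record.get("category")
    if category not in (None, "") and category not in ALLOWED_CATEGORIES:
        problems.append(f"unexpected category: {category}")

    return problems


print(validate({"name": "Rain Jacket", "price": 120.0, "category": "outerwear"}))
print(validate({"name": "", "price": "cheap", "category": "toys"}))
```

Returning a list of problems rather than raising on the first one lets a data team see everything that is wrong with a record in a single pass.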

Another vital factor is prompt engineering, which involves creating a set of instructions or prompts that guide the model in generating new data or outputs. Refining the prompts to be as detailed as possible helps ensure that the model produces outputs that meet specific criteria, such as tone, style, or accuracy.
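
One simple way to make criteria such as tone and length explicit is a reusable prompt template. The sketch below uses Python's standard `string.Template`; the template wording, the placeholder names, and the `build_prompt` helper are all hypothetical and only illustrate the idea.

```python
from string import Template

# Hypothetical template; the wording and placeholders are illustrative.
PROMPT_TEMPLATE = Template(
    "You are a $role.\n"
    "Task: $task\n"
    "Tone: $tone\n"
    "Length: at most $max_words words.\n"
    "Input data:\n$data"
)


def build_prompt(task, data, role="helpful assistant", tone="neutral", max_words=80):
    """Fill the template so tone and length criteria are stated explicitly."""
    return PROMPT_TEMPLATE.substitute(
        role=role, task=task, tone=tone, max_words=max_words, data=data
    )


prompt = build_prompt(
    task="Summarize the customer review below.",
    data="The jacket kept me dry, but the zipper feels flimsy.",
    tone="friendly",
    max_words=40,
)
print(prompt)
```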

Prompt engineering significantly influences the accuracy and relevance of the generative AI model’s outputs. This process involves experimenting with different prompts and tweaking them based on the model's performance to optimize its accuracy and efficiency.
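
The experiment-and-tweak loop can be sketched as a small comparison harness. Everything here is an assumption made for illustration: `fake_generate` is a stand-in that returns canned text so the example runs offline, and the scoring rule (required terms present, word limit respected) is a toy metric rather than a recommended evaluation method.

```python
def score_output(text, required_terms, max_words):
    """Toy metric: share of required terms present; zero if over the word limit."""
    if len(text.split()) > max_words:
        return 0.0
    lowered = text.lower()
    hits = sum(1 for term in required_terms if term.lower() in lowered)
    return hits / len(required_terms)


def rank_prompts(prompts, generate, required_terms, max_words=50):
    """Run every prompt variant through `generate` and sort by score, best first."""
    results = [(score_output(generate(p), required_terms, max_words), p) for p in prompts]
    results.sort(key=lambda pair: pair[0], reverse=True)
    return results


def fake_generate(prompt):
    """Stand-in for a real model call (hypothetical canned responses)."""
    if "waterproof" in prompt:
        return "A waterproof, lightweight jacket for daily commutes."
    return "A nice jacket."


variants = [
    "Describe this jacket.",
    "Describe this jacket. Mention that it is waterproof and lightweight.",
]
ranked = rank_prompts(variants, fake_generate, ["waterproof", "lightweight"])
best_score, best_prompt = ranked[0]
print(best_score, best_prompt)
```

In practice, `generate` would wrap a call to the chosen model, and the metric would reflect whatever accuracy and relevance mean for the use case.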

Fine-tuning services for GPT models are also key here. Fine-tuning involves retraining a pretrained GPT model on a smaller, more specific dataset, such as client-specific data or prompts, to adjust its parameters and improve its performance and accuracy on a particular task.
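
Preparing that smaller, task-specific dataset usually means serializing example pairs into the format the provider expects. The sketch below writes `prompt`/`completion` pairs as JSON Lines, following the layout used by OpenAI's legacy fine-tuning for GPT-3 base models. The example pairs, the separator, and the stop marker are assumptions for illustration; other providers and newer chat models expect different formats, so check the current documentation before relying on this layout.

```python
import json

# Hypothetical client examples: (input text, desired output) pairs.
EXAMPLES = [
    ("Product: Rain Jacket. Features: waterproof, packable.",
     "Stay dry on the go with this waterproof, packable rain jacket."),
    ("Product: Trail Shoe. Features: grippy sole, breathable mesh.",
     "Tackle any trail with a grippy sole and breathable mesh upper."),
]

SEPARATOR = "\n\n###\n\n"  # assumed marker for the end of the prompt
STOP = " END"              # assumed marker for the end of the completion


def to_jsonl(examples):
    """Serialize examples as one JSON object per line."""
    lines = []
    for prompt, completion in examples:
        row = {"prompt": prompt + SEPARATOR, "completion": " " + completion + STOP}
        lines.append(json.dumps(row))
    return "\n".join(lines)


jsonl = to_jsonl(EXAMPLES)
print(jsonl)
```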

Fine-tuning allows the AI models to be adapted to specific requirements without the need to start the development process from scratch. This not only saves a considerable amount of time and resources — it also enhances the precision and effectiveness of AI models. In this workflow, we manage the GPT pipelines and deployment. This involves setting up and managing pipelines for data transformation, prompt engineering, and GPT model training as well as deploying the GPT model in production environments.
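
As a rough illustration of what managing such a pipeline means in code, the sketch below chains three stages (data transformation, prompt building, and a model call) and records which stages ran. The stage functions and the payload layout are hypothetical, and `call_model` only echoes its input so the example runs without any external service.

```python
from typing import Callable, List

Stage = Callable[[dict], dict]


def run_pipeline(stages: List[Stage], payload: dict) -> dict:
    """Pass the payload through each stage in order, recording what ran."""
    for stage in stages:
        payload = stage(payload)
        payload.setdefault("history", []).append(stage.__name__)
    return payload


def transform_data(payload):
    payload["records"] = [r.strip().lower() for r in payload["raw"]]
    return payload


def build_prompts(payload):
    payload["prompts"] = [f"Describe the product: {r}" for r in payload["records"]]
    return payload


def call_model(payload):
    # Placeholder for a real model call; echoes the prompt so the sketch runs offline.
    payload["outputs"] = [f"[generated for] {p}" for p in payload["prompts"]]
    return payload


result = run_pipeline(
    [transform_data, build_prompts, call_model],
    {"raw": ["  Rain Jacket ", "Trail Shoe"]},
)
print(result["history"])
print(result["outputs"])
```

A production setup would typically hand this orchestration to a managed pipeline service, but the shape stays the same: ordered stages, a shared payload, and a record of what ran.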

Sample use case: Generative AI for an e-commerce company

Let’s imagine a company that wants to use a generative AI model to create product descriptions for its e-commerce site. Initially, they’d prepare the input data by collecting information on the products they sell and organizing it appropriately for the AI model's training.

After preparing the input data, the company trains the AI model based on this data. They might use a pretrained model, such as OpenAI's Davinci, and fine-tune it to produce product descriptions specific to their company.

In this context, prompt engineering would involve developing prompts that provide information about the product’s features, benefits, and unique selling points. These prompts should be crafted in a way that allows the AI model to understand the context and purpose of the product description and generate a relevant and accurate output.
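
For this use case, a prompt that carries the product's features, benefits, and unique selling points might be assembled as in the sketch below. The product record, its field names, and the instruction wording are all hypothetical.

```python
# Hypothetical product record; every field name and value is illustrative.
PRODUCT = {
    "name": "Packable Rain Jacket",
    "features": ["waterproof shell", "folds into its own pocket"],
    "benefits": ["stays dry in heavy rain", "easy to carry while traveling"],
    "usps": ["made from recycled materials"],
}


def product_prompt(product, max_words=60):
    """Turn structured product data into a description-writing prompt."""
    lines = [
        f"Write a product description for: {product['name']}",
        "Features: " + "; ".join(product["features"]),
        "Benefits: " + "; ".join(product["benefits"]),
        "Unique selling points: " + "; ".join(product["usps"]),
        "Context: the text appears on an e-commerce product page. "
        f"Use at most {max_words} words and only the facts listed above.",
    ]
    return "\n".join(lines)


description_prompt = product_prompt(PRODUCT)
print(description_prompt)
```

Stating the context and restricting the model to the listed facts is one way to keep descriptions relevant and to reduce the chance of invented specifications.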

After fine-tuning the model, the company uses it to create product descriptions based on the input text. They then deploy the AI model and make it accessible through an API, allowing the generated product descriptions to be integrated with their e-commerce website. To ensure that the generated product descriptions remain accurate and relevant over time, the company needs to monitor the AI model’s performance and make necessary updates and improvements, especially when new products come along.
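
Monitoring can start with simple automated checks on each batch of generated descriptions. The sketch below is one possible approach under assumed rules: a description must be non-empty, mention the product name, and stay within a word limit. The sample batch and the alert threshold are hypothetical.

```python
def check_description(product_name, description, max_words=60):
    """Return the list of rule violations for one generated description."""
    if not description.strip():
        return ["empty output"]
    issues = []
    if product_name.lower() not in description.lower():
        issues.append("product name missing")
    if len(description.split()) > max_words:
        issues.append("too long")
    return issues


def pass_rate(batch, max_words=60):
    """Share of (product_name, description) pairs with no violations."""
    passed = sum(1 for name, text in batch if not check_description(name, text, max_words))
    return passed / len(batch)


# Hypothetical batch of generated descriptions.
BATCH = [
    ("Rain Jacket", "This rain jacket keeps you dry in any downpour."),
    ("Trail Shoe", "Grippy and breathable, the Trail Shoe is built for rough terrain."),
    ("Wool Beanie", "A warm hat for cold days."),  # never mentions the product name
    ("Travel Mug", ""),                            # model returned nothing
]

ALERT_THRESHOLD = 0.9  # assumed threshold; tune to the business's tolerance
rate = pass_rate(BATCH)
needs_review = rate < ALERT_THRESHOLD
print(f"pass rate: {rate:.2f}, needs review: {needs_review}")
```

A falling pass rate after new products are added is a signal that the prompts or the fine-tuned model may need updating.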

Figure 5. An example of how an e-commerce company can use generative AI for product management

Choosing the right generative AI platform

The choice of platform can significantly affect the performance, scalability, and cost-effectiveness of the model. Lingaro’s data science and AI teams can use the Azure OpenAI Service to apply large language models to various use cases. GPT-3 models, for instance, can be used to generate code from conversational language or integrated with Microsoft Power Apps. GPT-4 can be used to build more advanced virtual agents, AI assistants, and chatbots, and can even be integrated with Microsoft 365 Copilot to enrich Microsoft 365 apps (e.g., Word, Excel, PowerPoint, Outlook, Teams). To properly use these services, companies will need to manage data pipelines, train and fine-tune the models, and deploy them in production environments securely.

The Azure OpenAI Service is widely known, but there are other generative AI platforms that companies can consider. One is Amazon Bedrock from Amazon Web Services (AWS), which enables access to large foundation models (FMs) via APIs. Bedrock, along with Amazon’s new AI toolkits, is touted to be geared more toward B2B applications. Moreover, Bedrock can be integrated with Amazon SageMaker ML features such as Experiments, which allows for testing of various models, and Pipelines, which streamlines the process of building, training, and deploying machine learning models.

Another platform is Google Cloud’s Vertex AI, which now supports generative AI through Model Garden and Generative AI Studio. Model Garden provides a single managed environment for using FMs as well as open-source and third-party models. These models can then be fine-tuned and deployed in Generative AI Studio, which serves as a low-code platform for incorporating generative AI capabilities into applications. Generative AI Studio also offers an extensive range of features, such as a chat interface, prompt design and tuning, and the option to create bespoke models.

Choosing the right platform or service requires thoughtful consideration of its benefits and costs as well as the complexity of the project and the specific capabilities required. Each platform has its own strengths and weaknesses, so it's worth exploring each option to find one that best fits the business’s needs.
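
One lightweight way to structure this comparison is a weighted decision matrix. The sketch below is purely illustrative: the criteria, the weights, and the 1-to-5 ratings are placeholders and do not reflect an assessment of any real platform.

```python
# Hypothetical criteria weights (higher = more important to this business).
WEIGHTS = {"capabilities": 4, "cost": 3, "integration_effort": 3}

# Placeholder 1-5 ratings; these are NOT assessments of any real platform.
CANDIDATES = {
    "Platform A": {"capabilities": 4, "cost": 3, "integration_effort": 5},
    "Platform B": {"capabilities": 5, "cost": 2, "integration_effort": 3},
}


def weighted_score(ratings, weights):
    """Weighted average of the ratings across all criteria."""
    return sum(ratings[c] * w for c, w in weights.items()) / sum(weights.values())


scores = {name: weighted_score(r, WEIGHTS) for name, r in CANDIDATES.items()}
best = max(scores, key=scores.get)
print(scores, best)
```

The numbers matter less than the exercise of agreeing on the criteria and their relative importance before comparing options.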

Lingaro Group understands the transformative power of generative AI and its potential to revolutionize how enterprises adopt AI. However, we also recognize the potential risks associated with this technology, and we take a holistic approach in using this innovation.

Our end-to-end approach involves collaborating with businesses to develop a comprehensive strategy that aligns their unique immediate needs with their long-term vision of adopting generative AI. Our commitment to responsible AI means we implement guardrails that adhere to governance regulations and industry best practices.