In our previous post, we covered the challenges enterprises face when building machine learning models, how machine learning operations (MLOps) address those problems, and the new headaches that machine learning (ML) solutions create for data and platform teams. In this post, we’ll look at how Azure AI helps teams overcome MLOps challenges and make modeling an effective and efficient way of delivering value from business use cases.

MLOps streamlines the development and deployment of ML models. However, while MLOps makes undertaking ML projects more feasible and increases their chances of being productionized, implementing MLOps poses its own set of challenges:

- Lack of MLOps talent: The demand for skilled professionals who can execute MLOps and create platforms from scratch far exceeds supply.

- Need for a continuous model and data feedback loop: The business value an ML model creates may decrease over time without the enterprise realizing that it needs to act.

- Poor data quality and data management issues: MLOps can help reveal biased, uncleaned, or incomplete data used for training ML models, but managing data for modeling workflows can itself prove problematic.

- Lack of standards and best practices: Without standards to adhere to and guidelines to follow, it's difficult to ensure model quality and to reuse, replicate, and manage models.

- High costs: Organizations must invest heavily in the right resources and dedicate significant amounts of time to make MLOps succeed.

One way to overcome these challenges is to facilitate MLOps with Azure AI and Azure ML.

What are Azure AI and Azure ML?

Azure AI is a portfolio of platforms and services tailored for AI engineers and data scientists, enabling them to achieve more with fewer resources. Included in this portfolio is Azure Machine Learning (Azure ML), a set of cloud services for accelerating and managing the machine learning project life cycle. Azure ML can make it easier for organizations to implement MLOps and deploy ML models that create significant business value.

While there are other platforms that enable MLOps, such as Microsoft Fabric and Databricks, we're focusing on Azure ML because of its comprehensive features and capabilities, which are backed by Microsoft's investments in AI and ML (such as its partnership with OpenAI, the maker of ChatGPT), as well as its competitive pricing models.

Figure 1. A peek into Azure AI Studio’s user interface

Azure ML: Fundamentals and Key MLOps Features

Azure ML has many features that make implementing MLOps much easier and more cost-effective. Below are its fundamental features:

- Registry: Contains registries for code components, pipelines, data, features, models, experiments, and environments, all with versioning capabilities

- Data management: Provides off-the-shelf connectors, lineage, and monitoring, as well as ML-specific data tooling like a feature store, data labeling, model storage, and monitoring

- Managed infrastructure: Provisions resources and assets (e.g., compute resources, interactive compute targets, or environments that are optimized for ML workloads)

- Compute options: Offers a wide range of processing power to choose from, from the cheapest CPU machines to an H100 supercomputer containing over 285,000 processor cores and 10,000 graphics cards

- Model management: Enables native management with MLflow and out-of-the-box explainability and responsible AI dashboards, plus native integration with public model repositories like Hugging Face

- Security: Includes network security, identity management, privileged access, data protection, logging, threat detection, and endpoint security


Figure 2. Components of Azure ML

Azure ML also has tools for accelerating software and model development and deployment:

- Azure ML pipelines: Iron out issues in data transformation workflows and offer integrations with other services

- Components registry: Makes components (i.e., self-contained pieces of code that each execute one step of a pipeline) available for reuse

- Azure ML Python SDK v2: Allows users to build and run ML workflows using Azure ML, interact with Azure ML in a Python environment, and do the following:

  - Submit AI experiments to be tracked, as well as training or scoring jobs.

  - Manage data, models, and environments.

  - Perform managed inferencing (real time and batch).

  - Stitch together multiple tasks and production workflows using Azure ML pipelines.

- Azure CLI v2: Enables users to manage Azure ML resources and use Python SDK functionalities like training and deploying models from the command line

- Interactive executions/job experience: Allows users to do the following during an ML model's training job:

  - Iterate on training scripts.

  - Monitor training progress remotely.

  - Debug the job remotely.

- AutoML: Offers processes and methods for making ML accessible to nonexperts, automates many ML model-building tasks to increase efficiency, and accelerates ML research

Azure ML has tools for enabling model management and scaling:

- Model registry: Enables users to store, version, share, and reuse registered ML models so that copies can serve similar but different use cases

- Managed online and batch endpoints: Free users from having to set up and manage the underlying infrastructure of ML models by taking care of serving, scaling, monitoring, and securing their models

- Responsible AI (RAI) dashboard: Enables users to abide by the six key principles of responsible AI:

  - Accountability: Those who design, develop, and deploy AI tools must be held responsible for everything those AI tools do.

  - Fairness: AI tools must not discriminate against or have any bias based on race, gender, religion, or sexual orientation.

  - Inclusiveness: AI developers must consider all human experiences and break down barriers that may exclude people from benefiting from AI.

  - Privacy and security: Data in an AI system must be protected so that no breach of personal privacy can occur.

  - Reliability and safety: AI systems must always perform as originally intended and resist efforts to manipulate them into acting otherwise.

  - Transparency: Information about how AI tools work and how ML models were built and trained must be easy to obtain, so that users can understand the data the tools generate and replicate the models as needed.

- Scorecards: Compile RAI ratings in shareable formats to be submitted for audits, compliance reports, and other corporate communications

Azure AI’s tools for monitoring and maintaining models include the following:

- Azure Monitor: Lets users watch over and track the health and utilization metrics of Azure AI and other services

- Environment build service: Allows users to choose among three environment types for building their ML model:

  - User-managed environments: Where the user is responsible for everything, from installations and configurations to dependency orchestrations

  - Curated environments: Premade environments that Azure provides so that users can immediately utilize various ML frameworks and deploy models more quickly

  - System-managed environments: Python environments that conda manages for users

- Azure ML registry: Enables users to create and use models, environments, components, and datasets in different workspaces (located in different Azure regions) across the entire organization

- Integrated security: Allows Azure AI to be configured to comply with the company's data security policies

What are Azure ML pipelines and the component registry?

Pipelines are software design patterns that structure logic into workflows and iron out the data transformation issues that occur in machine learning and data engineering. Pipelines are optimized for portability, reusability, and speed, and automatically orchestrate the dependencies between workflow steps. All of these allow MLOps engineers to focus on training ML models that address business use cases rather than on automation and infrastructure.

While pipelines can be created without using components (i.e., self-contained pieces of code that each execute one step of a pipeline), components give pipelines the highest degree of flexibility and reusability. Registering components makes them available for reuse, both by their creators and by other staffers across the organization for their own projects.

Figure 3. Creating a machine learning pipeline with Azure ML (Source: learn.microsoft.com)
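To make the idea of reusable, versioned components more concrete, here is a minimal sketch in plain Python. It does not use the Azure ML SDK: `Component`, `ComponentRegistry`, and `run_pipeline` are hypothetical names invented for illustration, and the two "steps" are toy functions. It only shows the general pattern of registering a step once and reusing it, pinned to a version, in more than one pipeline.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Component:
    """A self-contained step: a name, a version, and the function it runs."""
    name: str
    version: int
    run: Callable[[list], list]


class ComponentRegistry:
    """Hypothetical in-memory registry that keeps every version of a component."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, int], Component] = {}

    def register(self, name: str, run: Callable[[list], list]) -> Component:
        # A new registration under an existing name gets the next version number.
        version = 1 + max((v for (n, v) in self._store if n == name), default=0)
        component = Component(name, version, run)
        self._store[(name, version)] = component
        return component

    def get(self, name: str, version: Optional[int] = None) -> Component:
        # With no version given, return the latest registered one.
        if version is None:
            version = max(v for (n, v) in self._store if n == name)
        return self._store[(name, version)]


def run_pipeline(steps: List[Component], data: list) -> list:
    """Run components in order, feeding each output into the next step."""
    for step in steps:
        data = step.run(data)
    return data


registry = ComponentRegistry()
registry.register("drop_missing", lambda rows: [r for r in rows if r is not None])
registry.register("scale", lambda rows: [r / 10 for r in rows])
registry.register("scale", lambda rows: [r / 100 for r in rows])  # becomes version 2

# Two pipelines reuse the same registered components, pinned to different versions.
pipeline_a = [registry.get("drop_missing"), registry.get("scale", version=1)]
pipeline_b = [registry.get("drop_missing"), registry.get("scale")]

result_a = run_pipeline(pipeline_a, [10, None, 20])
result_b = run_pipeline(pipeline_b, [100, None, 200])
```

In a real platform the registry would be a shared service rather than a dictionary, but the design choice is the same: pipelines refer to components by name and version instead of copying their code.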

What exactly is an orchestration service, and does Azure ML fall into this category?

ML-powered tasks are accomplished by combining several steps into a complete workflow, from data transformation to model building and testing. Every step is developed individually and can be managed (i.e., configured, optimized, and automated) individually via different interfaces. As data moves through the workflow, each step transforms inputs into outputs, which become the inputs of the next step. This is how dependencies between steps are established. Running the steps in an order that respects those dependencies, and managing what each step passes on to the next, is called orchestration.
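The dependency handling described above can be sketched in a few lines of plain Python. This is an illustration of orchestration in general, not of how Azure ML implements it: the step names, the toy step functions, and the `orchestrate` helper are all assumptions made for the example. It uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) to derive a valid execution order from the declared dependencies.

```python
from graphlib import TopologicalSorter

# Each hypothetical step maps to the set of steps whose outputs it needs.
dependencies = {
    "prepare_data": set(),
    "train_model": {"prepare_data"},
    "evaluate_model": {"train_model", "prepare_data"},
    "register_model": {"evaluate_model"},
}

# Toy step functions: each receives a dict of its upstream outputs.
steps = {
    "prepare_data": lambda inputs: [1, 2, 3, 4],
    # The "model" is just the mean of the data.
    "train_model": lambda inputs: sum(inputs["prepare_data"]) / len(inputs["prepare_data"]),
    # The "evaluation" is the largest absolute error of that mean.
    "evaluate_model": lambda inputs: max(
        abs(x - inputs["train_model"]) for x in inputs["prepare_data"]
    ),
    # Only "register" the model if the error is acceptable.
    "register_model": lambda inputs: inputs["evaluate_model"] <= 2.0,
}


def orchestrate(dependencies, steps):
    """Run every step after its dependencies, passing outputs downstream."""
    order = list(TopologicalSorter(dependencies).static_order())
    outputs = {}
    for name in order:
        upstream = {dep: outputs[dep] for dep in dependencies[name]}
        outputs[name] = steps[name](upstream)
    return order, outputs


order, outputs = orchestrate(dependencies, steps)
```

An orchestration service adds scheduling, retries, logging, and compute provisioning on top of this basic ordering, which is the part that is hard to build and maintain in-house.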

Creating such workflows with in-house tools, and making them flexible enough to integrate with cloud infrastructure, is not easy. These difficulties make the workflows hard to scale and hard to rely on for training critical ML models. In Azure ML, pipelines and command jobs are natively orchestrated and can have schedules attached.

Azure ML also orchestrates its Docker image build service, which simplifies creating and managing containerized environments for ML workflows. This improves reproducibility and scalability and lets data scientists build and deploy models in containerized environments efficiently. In this way, Azure ML addresses these orchestration concerns as part of the platform.

Azure ML: The data scientist experience

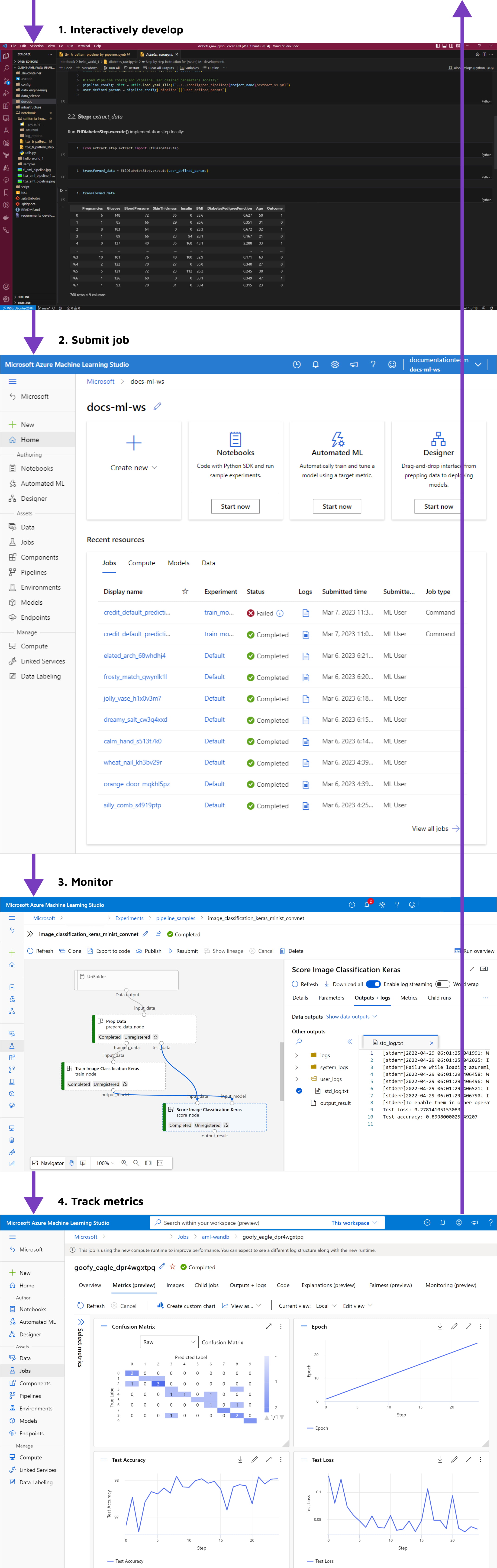

The following is a general workflow of development in Azure AI:

- Data scientists develop interactively in notebooks, either locally or on powerful on-demand Azure AI compute instances if more RAM is required. During this stage, they can use ML pipelines to structure their code into a workflow instead of mixing all code elements and stages together and being limited to a single compute type.

- When the code is ready, the job is run on Azure AI compute, on specified infrastructure and with a set of dependencies defined in a code environment (a Docker container). There's no need to configure the infrastructure: it is easy to request and is managed by the platform.

- The workspace view provides the ability to see running experiments and the data they use, access live logs, and check the status of pipeline steps.

- Metrics produced during the experiment can be logged within the platform, which provides a central space for comparing experiments, model results, and any kind of metrics. Azure AI contains a set of utilities to form graphs and bundle other information about the run as tags. The results of the experiment — model and associated artifacts — are stored in the model registry for further use.

Figure 4. A sample development workflow using Azure AI
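The last step of this workflow, logging metrics centrally, comparing runs, and handing the best result to the model registry, can be pictured with a small plain-Python sketch. It is only a stand-in for the platform's tracking features: `Run`, `ExperimentLog`, the metric values, and the `demand-forecast` model name are all hypothetical and exist only for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    """One experiment run with its logged metrics and descriptive tags."""
    name: str
    metrics: Dict[str, float] = field(default_factory=dict)
    tags: Dict[str, str] = field(default_factory=dict)


class ExperimentLog:
    """Hypothetical central store for runs, so results can be compared."""

    def __init__(self) -> None:
        self.runs: List[Run] = []

    def start_run(self, name: str, **tags: str) -> Run:
        run = Run(name=name, tags=dict(tags))
        self.runs.append(run)
        return run

    def best_run(self, metric: str, higher_is_better: bool = True) -> Run:
        # Only compare runs that actually logged the requested metric.
        candidates = [r for r in self.runs if metric in r.metrics]
        pick = max if higher_is_better else min
        return pick(candidates, key=lambda r: r.metrics[metric])


log = ExperimentLog()

baseline = log.start_run("baseline", compute="cpu")
baseline.metrics.update({"accuracy": 0.81, "rmse": 0.42})

tuned = log.start_run("tuned", compute="gpu")
tuned.metrics.update({"accuracy": 0.86, "rmse": 0.37})

# Pick the best run and record which run produced the registered model.
best = log.best_run("accuracy")
model_registry = {"demand-forecast": {"version": 1, "source_run": best.name}}
```

Keeping the link from a registered model back to the run that produced it is what makes results reproducible and auditable later on.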

How does Azure ML address MLOps implementation concerns?

Here’s how Azure ML can help organizations overcome challenges in implementing MLOps:

| Implementation challenge | How Azure ML helps |
| --- | --- |
| Lack of MLOps talent | Azure ML is a broad platform with many capabilities that enable even small IT teams to implement MLOps. For example, with Azure ML there is no need to invest in building a platform from scratch. |
| Poor data quality and data management issues | Azure ML provides the following: |
| Lack of standards and best practices | Standards are built into tools and processes to make MLOps easier to implement with consistency, scale, efficiency, and crucial monitoring. With standardization, models and workflows are easier to reuse and manage. |
| High costs | Azure ML's Studio capabilities are free, while the data and compute costs of other services follow a pay-as-you-go pricing model. |

While Azure ML is a powerful MLOps enabler that empowers users to implement MLOps best practices and ultimately create business value from ML models, it does not readily provide users with a continuous feedback loop that checks whether the business value produced remains consistent or decreases over time. Without one, enterprises wouldn't know they have a problem to fix until they have already spent heavily on maintaining a model that returns little in exchange.
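As a rough illustration of what such a feedback check might look like, the sketch below compares the recent average of a business value metric against an earlier baseline and flags a drop. Everything in it is an assumption for the sake of the example: the `value_decayed` function, the weekly granularity, the four-week windows, the 10% tolerance, and the sample numbers. It is not a feature of Azure ML.

```python
from statistics import mean
from typing import List


def value_decayed(
    weekly_value: List[float],
    baseline_weeks: int = 4,
    recent_weeks: int = 4,
    tolerance: float = 0.10,
) -> bool:
    """Return True when the recent average falls more than `tolerance` below baseline."""
    if len(weekly_value) < baseline_weeks + recent_weeks:
        return False  # not enough history to compare the two windows
    baseline = mean(weekly_value[:baseline_weeks])
    recent = mean(weekly_value[-recent_weeks:])
    return recent < baseline * (1 - tolerance)


# Hypothetical weekly business value attributed to a model (arbitrary units).
healthy = [100, 102, 98, 100, 101, 99, 100, 97]
decaying = [100, 102, 98, 100, 90, 85, 80, 75]

healthy_flag = value_decayed(healthy)
decaying_flag = value_decayed(decaying)
short_flag = value_decayed([100, 50])
```

A check like this only helps if the business metric is actually collected and tied back to the model, which is the gap the paragraph above describes.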

In our next post, we’ll share how Lingaro’s approach to implementing MLOps in Azure ML addresses this problem, other significant benefits of using this approach, and use cases where the approach is most apt for businesses to implement.
