The growing adoption of and investment in generative AI highlight its potential to revolutionize industries. From creating realistic virtual worlds for gaming and entertainment to assisting in design and creative work, generative AI is reshaping how businesses operate and deliver value to their customers. But how can it be used properly and responsibly?

While the advantages of generative AI are enticing, it’s important to recognize that the technology is still evolving and comes with certain limitations. The cost of fine-tuning and adapting models to specific needs, along with the time it takes to train them, are key considerations.

Limited API access and limited model explainability can pose further challenges. The potential expenses and computing resources required could also deter businesses from adopting generative AI or, worse, lead them to use it haphazardly.

Integrating generative AI in businesses

Here is an overview of the steps for implementing generative AI properly:

1. Identify specific business needs and use cases.

2. Choose the right type of generative AI.

3. Collect and preprocess data.

4. Fine-tune the model.

5. Integrate the model into business processes and data.

6. Monitor and adjust the model over time.

| Limit | Value |
|---|---|
| OpenAI resources per region per Azure subscription | 30 |
| Default quota per model and region (tokens per minute) | Text-Davinci-003: 120K; GPT-4: 20K; GPT-4-32K: 60K; all others: 240K |
| Default DALL-E quota limits | 2 concurrent requests |
| Maximum prompt tokens per request | Varies per model. For more information, see Azure OpenAI Service models |
| Max fine-tuned model deployments | 2 |
| Total number of training jobs per resource | 100 |
| Max simultaneous running training jobs per resource | 1 |
| Max training jobs queued | 20 |
| Max files per resource | 30 |
| Total size of all files per resource | 1 GB |
| Max training job time (job will fail if exceeded) | 720 hours |
| Max training job size: (tokens in training file) × (number of epochs) | 2 billion |

Azure OpenAI Service quotas and limits (subject to change) according to Microsoft, as of July 28, 2023
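Limits like these translate into simple capacity arithmetic. The sketch below assumes the July 2023 default values in the table above and a simplification that each request consumes roughly its prompt plus completion tokens of quota; it estimates requests per minute for a model and checks a planned training job against the tokens × epochs limit.

```python
# Default tokens-per-minute (TPM) quotas per model and region, copied from the
# July 28, 2023 table above. These values are subject to change.
DEFAULT_TPM = {
    "text-davinci-003": 120_000,
    "gpt-4": 20_000,
    "gpt-4-32k": 60_000,
}
DEFAULT_TPM_OTHERS = 240_000  # "All others"

# Max training job size: (tokens in training file) x (number of epochs).
MAX_TRAINING_JOB_SIZE = 2_000_000_000


def max_requests_per_minute(model: str, tokens_per_request: int) -> int:
    """Estimate how many requests fit into one minute of default TPM quota.

    Simplifying assumption: each request uses about tokens_per_request
    (prompt + completion) tokens of quota.
    """
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    quota = DEFAULT_TPM.get(model.lower(), DEFAULT_TPM_OTHERS)
    return quota // tokens_per_request


def fits_training_limit(tokens_in_file: int, epochs: int) -> bool:
    """Return True if tokens x epochs stays within the 2-billion-token job limit."""
    return tokens_in_file * epochs <= MAX_TRAINING_JOB_SIZE
```

For example, a GPT-4 deployment with the default 20K TPM quota and about 1,000 tokens per request supports roughly 20 requests per minute, and a 500-million-token training file fits the 2-billion limit for 4 epochs but not for 5.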

Implementing generative AI using Azure OpenAI

Azure OpenAI Service is an ideal choice for getting started with generative AI. Its wide selection of models supports a variety of enterprise use cases, such as enhancing customer interactions, automating processes, and gaining valuable insights from unstructured data.

GPT-4 model

GPT-4 is a set of models that builds upon the success of its predecessor, GPT-3.5. Because it can understand and generate both natural language and code, GPT-4 can tackle complex problems more accurately than earlier models. Like GPT-3.5 Turbo, it is optimized for chat-based solutions but also works well for traditional completion tasks.

Sample use case: Virtual assistant for customer support

The language comprehension and generation skills of GPT-4 make it ideal for building a virtual assistant for customer support. GPT-4 can speed up the development of customer dialogues and answers to frequently asked questions, and it can power a conversational agent capable of both tailored and standard replies.
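The following is a minimal sketch, using only the Python standard library, of how a support assistant might assemble a chat completions request for a GPT-4 deployment on Azure OpenAI. The endpoint, deployment name, system prompt, and the company name Contoso are hypothetical placeholders; the URL pattern and `api-version=2023-05-15` reflect the Azure OpenAI REST API as we understand it, so verify them against the current API reference. The function only builds the request, so it can be inspected without network access.

```python
import json
import urllib.request

# Hypothetical values: replace with your own resource, deployment, and API version.
ENDPOINT = "https://my-resource.openai.azure.com"
DEPLOYMENT = "gpt-4-support"
API_VERSION = "2023-05-15"

# Hypothetical system prompt that sets the assistant's role and escalation rule.
SYSTEM_PROMPT = (
    "You are a customer support assistant for Contoso. "
    "Answer politely and concisely. If you are unsure, offer to escalate "
    "to a human agent."
)


def build_support_request(history, user_message, api_key):
    """Build (but do not send) a chat completions request.

    history: earlier turns as {"role": ..., "content": ...} dicts, which lets
    the model tailor its reply to the ongoing conversation.
    """
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    messages.extend(history)
    messages.append({"role": "user", "content": user_message})
    # A low temperature is an assumption that favors consistent, standard replies.
    body = {"messages": messages, "temperature": 0.2, "max_tokens": 400}
    url = (f"{ENDPOINT}/openai/deployments/{DEPLOYMENT}"
           f"/chat/completions?api-version={API_VERSION}")
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
```

To send the request, you would pass it to `urllib.request.urlopen` and read the assistant's reply from `choices[0].message.content` in the JSON response. An official SDK could replace the hand-built request; the standard library is used here only to keep the sketch dependency-free.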

GPT-3.5 model

Another model in the Azure OpenAI Service lineup is GPT-3.5. As an improved version of GPT-3, GPT-3.5 offers enhanced capabilities in understanding and generating natural language and code. Within the GPT-3.5 family, GPT-3.5 Turbo stands out as a capable and cost-effective choice.

Sample use case: Content generation for marketing campaigns

GPT-3.5 can be used to generate compelling content for marketing campaigns. Given a brief or a set of keywords, GPT-3.5 can draft blog posts, social media captions, and email marketing copy that match a prescribed brand voice and target audience.
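A brief and keyword list like the one described above can be turned into chat messages programmatically. This hypothetical sketch shows one way to encode brand voice, audience, channel, and keywords into the system and user messages; the `CampaignBrief` fields are illustrative and not part of any Azure API.

```python
from dataclasses import dataclass, field


@dataclass
class CampaignBrief:
    """Hypothetical structure for a marketing brief."""
    product: str
    channel: str       # e.g. "blog post", "social media caption", "email"
    brand_voice: str   # e.g. "friendly and upbeat"
    audience: str
    keywords: list[str] = field(default_factory=list)


def build_marketing_messages(brief: CampaignBrief) -> list[dict]:
    """Turn a brief into chat messages: voice and audience go in the system
    message, the concrete writing task and keywords go in the user message."""
    system = (
        f"You are a copywriter. Write in a {brief.brand_voice} brand voice "
        f"for {brief.audience}. Do not invent product claims that are not "
        f"in the brief."
    )
    user = f"Write a {brief.channel} about {brief.product}."
    if brief.keywords:
        user += " Include these keywords: " + ", ".join(brief.keywords) + "."
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

The resulting list would be sent as the `messages` field of a chat completions request to a GPT-3.5 Turbo deployment. Keeping the brand voice in the system message means it applies to every piece of copy generated from the same brief.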

Embedding models in Azure OpenAI

Azure OpenAI Service also offers a range of embedding models that support text similarity tasks by converting text into numerical vectors. Among the embedding models, text-embedding-ada-002 (Version 2) is recommended because of its improved performance and updated token limit.
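To obtain a vector, you send text to an embedding deployment. The sketch below builds such a request with the Python standard library and extracts the vector from the JSON response. The endpoint and deployment name are hypothetical, and the route and `api-version` value reflect the Azure OpenAI REST API as of mid-2023, so treat them as assumptions to verify.

```python
import json
import urllib.request

ENDPOINT = "https://my-resource.openai.azure.com"   # hypothetical resource
DEPLOYMENT = "text-embedding-ada-002"               # hypothetical deployment name
API_VERSION = "2023-05-15"


def build_embedding_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a request that embeds a single piece of text."""
    url = (f"{ENDPOINT}/openai/deployments/{DEPLOYMENT}"
           f"/embeddings?api-version={API_VERSION}")
    body = {"input": text}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def parse_embedding(response_body: bytes) -> list[float]:
    """Extract the vector from a response shaped like {"data": [{"embedding": [...]}]}."""
    return json.loads(response_body)["data"][0]["embedding"]
```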

Sample use case: Document similarity analysis for legal research

Embedding models, such as text-embedding-ada-002 (Version 2), can be immensely helpful in legal research. By converting legal documents into numerical vectors, organizations can perform similarity analysis to identify relevant cases, statutes, or precedents. This can significantly streamline the research process and save valuable time for legal professionals.
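Once documents are embedded, similarity analysis usually comes down to comparing vectors, most commonly with cosine similarity. This minimal sketch ranks precomputed document vectors against a query vector. The three-dimensional toy vectors and document IDs in the usage note are hypothetical stand-ins for real embeddings, which are much longer.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat zero vectors as dissimilar to everything
    return dot / (norm_a * norm_b)


def rank_documents(query_vec, doc_vecs, top_k=3):
    """Return up to top_k (doc_id, score) pairs, most similar first.

    doc_vecs maps a document ID (case, statute, precedent, ...) to its vector.
    """
    scored = [(doc_id, cosine_similarity(query_vec, vec))
              for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]
```

With a query of `[1.0, 0.0, 0.0]` and documents `case_a: [0.9, 0.1, 0.0]`, `statute_b: [0.0, 1.0, 0.0]`, and `precedent_c: [0.5, 0.5, 0.0]`, `rank_documents(query, docs, top_k=2)` returns `case_a` first and `precedent_c` second. For large document collections, a vector database or approximate nearest-neighbor index would typically replace this linear scan.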

DALL-E (Preview)

DALL-E models, currently in preview, bring generative AI to image generation. These models can create original images based on text prompts. A sample use case is artistic image generation for advertising campaigns, where descriptive prompts of the desired visuals or concepts are turned into images.
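The default DALL-E quota in the quota table allows only 2 concurrent requests, so an advertising workflow that generates many images at once needs to throttle itself. This sketch enforces that limit on the client side with a bounded semaphore. The `submit` callable is a hypothetical stand-in for whatever code actually calls the image API, and `FakeImageService` exists only to demonstrate the limit without network access.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

# Default DALL-E quota (July 2023): 2 concurrent requests.
MAX_CONCURRENT_IMAGE_REQUESTS = 2
_image_slots = threading.BoundedSemaphore(MAX_CONCURRENT_IMAGE_REQUESTS)


def generate_image(prompt, submit):
    """Run submit(prompt) while holding one of the limited concurrency slots."""
    with _image_slots:
        return submit(prompt)


def run_batch(prompts, submit, workers=8):
    """Process many prompts in parallel threads; the semaphore caps in-flight calls.

    Results come back in the same order as the prompts.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda p: generate_image(p, submit), prompts))


class FakeImageService:
    """Stand-in for the real image API that records peak concurrency."""

    def __init__(self):
        self._lock = threading.Lock()
        self.active = 0
        self.peak = 0

    def submit(self, prompt):
        with self._lock:
            self.active += 1
            self.peak = max(self.peak, self.active)
        time.sleep(0.05)  # simulate network latency
        with self._lock:
            self.active -= 1
        return f"image for: {prompt}"
```

Even with eight worker threads, no more than two calls are in flight at once. A production client would usually also retry with backoff when the service reports throttling, since other clients may share the same quota.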

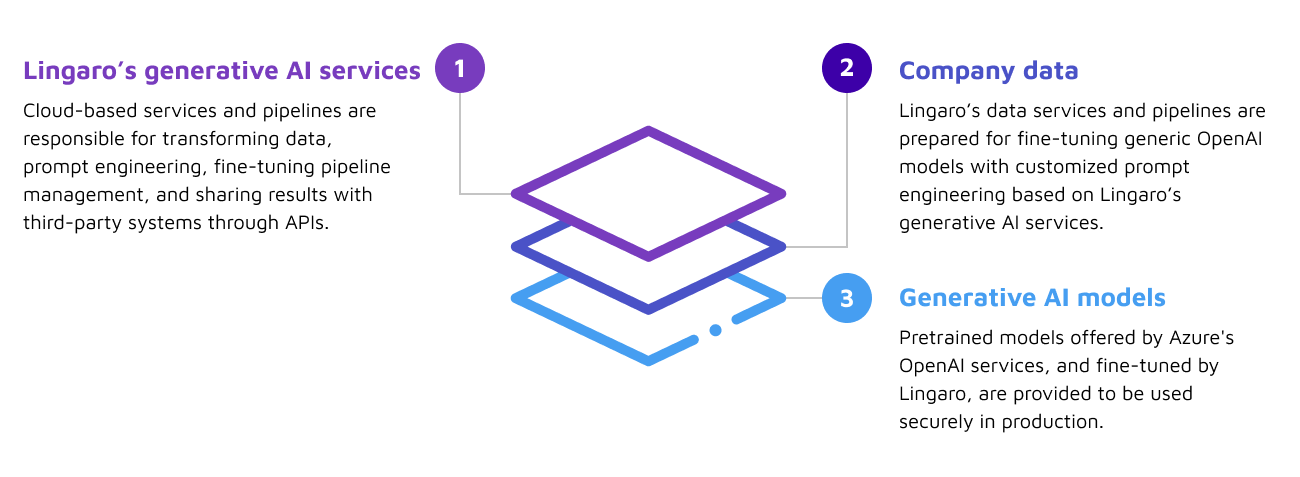

Figure 1. A visualization of the main components of Lingaro’s end-to-end approach to generative AI

Lingaro’s end-to-end approach to generative AI

Lingaro's end-to-end approach to generative AI consists of these components:

- Lingaro generative AI services: Lingaro provides cloud-based services and pipelines that handle data transformation, prompt engineering, pipeline management, and result sharing through APIs. These services enable raw data to be prepared for use by generative AI models. API management and prompt engineering are crucial for integrating AI models into existing systems and optimizing the accuracy and relevance of the model outputs.

- Company data: Prompt engineering relies on integrating relevant data from the client's own sources. For example, a financial services company can use transaction data to train an AI model for personalized investment recommendations, while a media company can use content data to generate articles or video scripts. By using their own data, companies can improve the accuracy and relevance of generative AI outputs, leading to better customer satisfaction and increased efficiency.

- Generative AI models: Lingaro utilizes pretrained AI models from providers such as Azure OpenAI Service, Google, Meta, and Amazon. These models need to be adapted to specific use cases, hyperparameters, or other specifications. Each model has strengths and limitations that suit it to particular use cases. For example, StyleGAN2 excels at generating realistic images of faces, while ERNIE is more suitable for natural language processing (NLP) tasks. Choosing the right model is essential and requires weighing these distinctions against specific requirements.

Lingaro Group understands the transformative power of generative AI and its potential to revolutionize how enterprises adopt AI. However, we also recognize the potential risks associated with this technology, and we take a holistic approach to using this innovation.

Download our guide on implementing generative AI in the business, which covers:

- Different approaches to implementation.
- Current constraints of generative AI and OpenAI, including direct and associated costs.
- An end-to-end approach to implementing generative AI, including a sample use case.
- The importance of prompts and prompt engineering, data transformation, and the right generative AI platform.
