In an earlier post, we showed how Azure Machine Learning (ML) enables enterprises to implement AI solutions with high MLOps maturity to efficiently build and deploy ML models that address their particular use cases. Indeed, the versatile platform brings great value, but the value depends greatly on how efficiently it is utilized. To illustrate, Azure ML does not yet have a straightforward way of enabling users to maintain their domain-specific models and prevent phenomena such as data drift that diminish the models’ capacity to create value for the enterprise.

In business, resources like Azure ML are often underutilized. For example, a company might use it only to manage infrastructure and schedule jobs when it could also use its MLOps features for data exploration, validation, model monitoring, and hyperparameter optimization to develop and maintain its ML solutions. This usually stems from a lack of knowledge and expertise, which is why such ML projects often take too long to launch into production, fail to deliver expected results, or, if they do provide initial value, are difficult to extend to other, larger areas of the business.

With all of this in mind, Lingaro envisions itself as a data and AI solution-building partner that makes the most of Azure ML to cocreate solutions that deliver the most business value possible.

Lingaro realizes this vision by:

- Helping clients improve the time-to-market of their ML projects.
- Ensuring that the clients’ business requirements turn into business value.
- Scaling the ML solution effectively to whatever business area it is needed.
- Eliminating production incidents.
- Standardizing the ML process across the organization.

With industry-recognized and certified experts at Lingaro’s AI and Data Science practice, we’re confident in our ability to achieve all of these, thanks to the Lingaro MLOps Framework. It’s designed to provide substantial expertise in AI, ML, and Azure to enterprises as they strive to build efficient machine learning operations on the Azure platform.

In this article, we’ll focus on how the framework improves the time to market of ML projects, a few examples of architectures, and the importance of sustainability in AI solutions.

Lingaro's MLOps Framework improves time to market

Lingaro begins by determining the steps clients need to take to empower their data teams to create a proof of concept (PoC) within the shortest possible timeframe. Let’s use demand forecasting as a PoC example. To build a demand forecasting ML model, the data science team needs to:

- Define acceptance requirements for the model and system to be successful.
- Identify data sources and run exploratory data analysis (EDA).
- Explore various modeling techniques.
- Evaluate the model based on the acceptance requirements.
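The last step, evaluating a model against acceptance requirements, can be made concrete with a small check. The following is only a minimal sketch under stated assumptions: the choice of MAPE as the metric, the 15% limit, the sample numbers, and all class and function names are illustrative and do not come from the framework itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceRequirement:
    """Hypothetical requirement: a metric must stay at or below a limit."""
    metric: str
    max_value: float


def mape(actual, forecast):
    """Mean absolute percentage error, skipping points where actual is zero."""
    pairs = [(a, f) for a, f in zip(actual, forecast) if a != 0]
    if not pairs:
        raise ValueError("MAPE is undefined when every actual value is zero")
    return sum(abs(a - f) / abs(a) for a, f in pairs) / len(pairs)


def evaluate(actual, forecast, requirements):
    """Return {metric: (value, passed)} for each requirement."""
    metrics = {"mape": mape(actual, forecast)}
    return {
        r.metric: (metrics[r.metric], metrics[r.metric] <= r.max_value)
        for r in requirements
    }


# Illustrative numbers only, not real sales data.
actual = [100, 120, 80, 90]
forecast = [110, 114, 84, 81]
report = evaluate(actual, forecast, [AcceptanceRequirement("mape", 0.15)])
```

Writing the requirement down as data rather than as a comment in a notebook means the same check can run on every new model version.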

A data scientist can set up a local machine to serve as a working environment, but this is insufficient because:

- Data must be gathered from various data sources, and the combined volume is too large to explore on one local machine.
- A local machine has insufficient performance specs (e.g., disk, I/O, network, RAM, CPU, GPU) to handle ML workflows.
- ML projects require collaboration, and the results need to be replicable at any point in time.
- Evaluations need to be tracked to understand the progress on the commit level and provide visibility to both reviewer and developer.

Given what a data science team needs to do with limited staff and budget, Lingaro helps develop the PoC and turn it into a production-ready solution in a quick and repeatable fashion. For this purpose, we use Microsoft Azure ML as our foundational MLOps platform because, among other reasons, it offers strong MLOps features, provides a great workspace for PoC development, and offers robust data integrations to fulfill the tasks mentioned earlier.

Another important reason why we choose Azure ML is that we developed our very own MLOps framework to augment its capabilities and accelerate the setup process via three components:

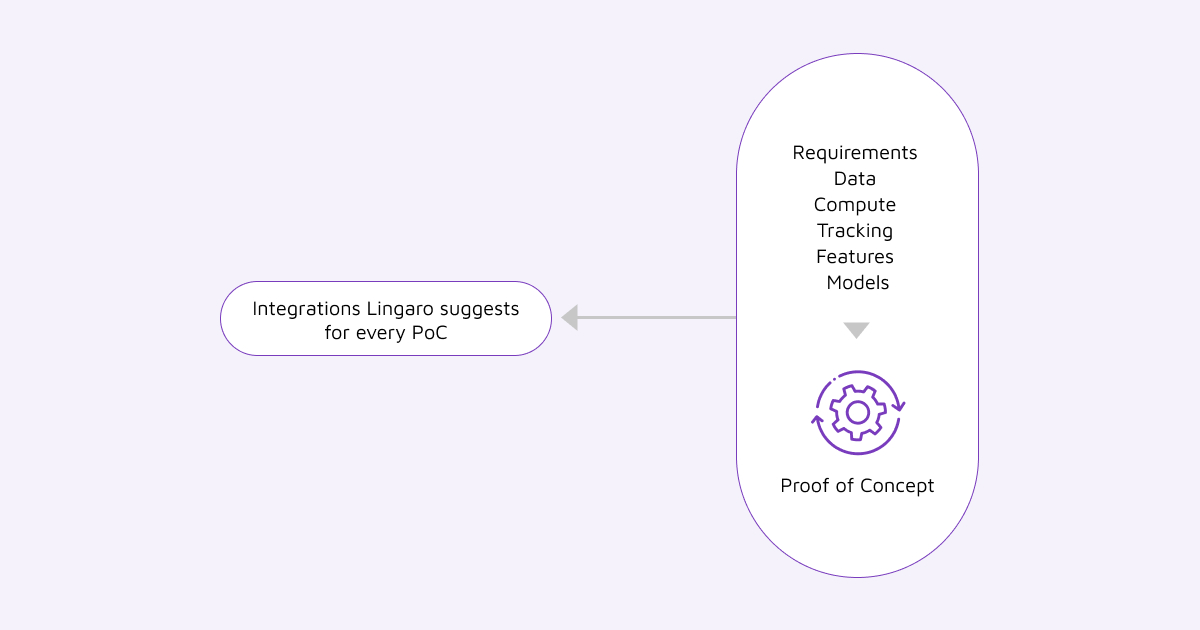

AI/ML workspace management platform: Lingaro uses infrastructure as code (IaaC) for the creation and management of data science workspaces and a central hub. With IaaC, once a team wants to start an ML project, we can automatically set up a workspace with machines and data connectors for them so that all they have to do is connect remotely to start working. We then propose DevOps processes for continuous integration and continuous deployment (CI/CD) as well as introduce aspects of NoOps. Our framework seamlessly connects data science teams with infrastructure, data, features, and preexisting models (if any), enabling them to concentrate on addressing evolving business needs or KPIs.

Figure 1. Successful PoC development requires use case assessment to define business, data, model or system requirements. The fulfillment of these requirements then needs to be measured continuously.

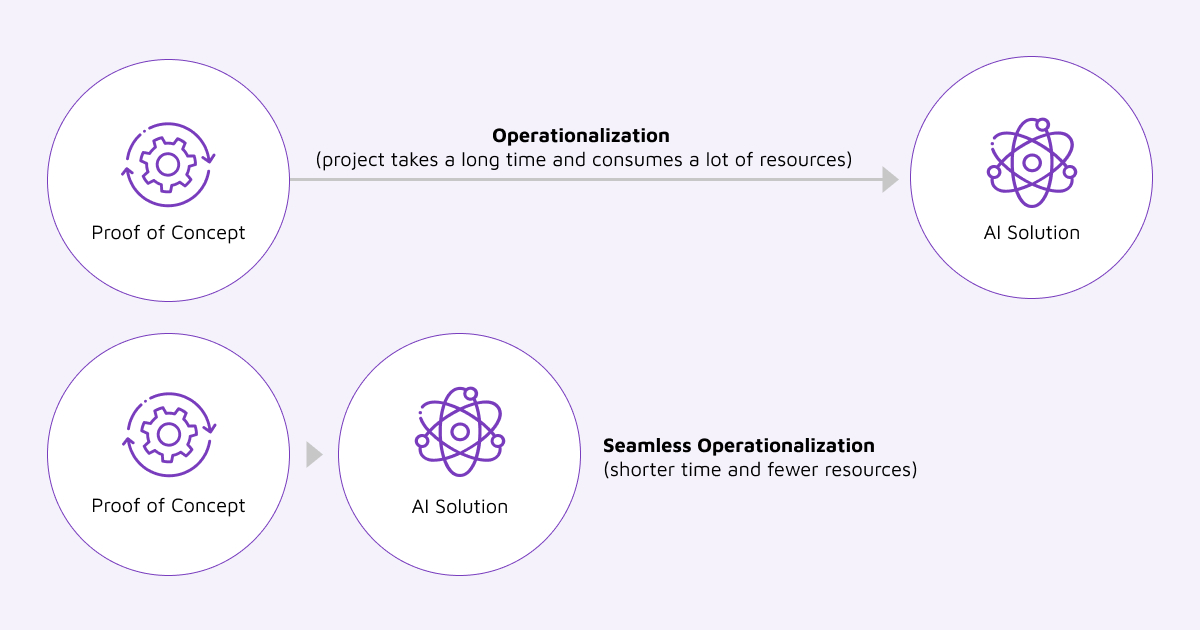

Production-ready development: We focus on providing a development experience that minimizes the need to rewrite data science PoCs into enterprise-ready solutions. When AI experimentation follows a defined pattern, it can be efficiently transformed into a production-ready solution, which shortens time to market significantly. The assets and processes used in our MLOps framework are standardized, which means that there is no need to refactor each PoC model. Data scientists can use templates to write code that can be used in production right from the start and in a secure manner.

Figure 2. We enhance the way data scientists deliver PoC, increase its viability, and make it easily transferable to an enterprise-ready solution.

Lingaro pipeline patterns: Pipeline templates are prebuilt, carefully designed machine learning design patterns that let teams build AI solutions more efficiently than if they were to establish and implement MLOps requirements from scratch.

Lingaro’s pipeline patterns are used to optimize the hyperparameters of ML models and facilitate the training, validation, deployment, redeployment, and monitoring of models. The patterns can also be used to increase data engineering efficiency by facilitating the ingestion, transformation, and validation of data.

The patterns can be used for popular use cases as well as extended for new use cases. We bring use case expertise across different domains like predictive analytics, natural language processing (NLP), computer vision (CV), and recommendation systems.
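To give a feel for what a reusable pipeline template can look like, here is a minimal sketch. It is not Lingaro's implementation: the `run_pipeline` helper, the step names, and the toy "model" (a simple average) are all assumptions made for illustration. The idea it shows is that the ordering of steps is defined once, and teams plug their own step functions into it.

```python
from typing import Callable, Dict, List, Tuple

# A step takes the shared context dict and returns an updated copy.
Step = Callable[[Dict], Dict]


def run_pipeline(steps: List[Tuple[str, Step]], context: Dict) -> Dict:
    """Run named steps in order and record which ones completed."""
    completed = []
    for name, step in steps:
        context = step(context)
        completed.append(name)
    return {**context, "completed": completed}


def ingest(ctx: Dict) -> Dict:
    # Hypothetical raw data with one missing value.
    return {**ctx, "rows": [3.0, 4.0, None, 5.0]}


def validate(ctx: Dict) -> Dict:
    # Drop missing values before training.
    return {**ctx, "rows": [r for r in ctx["rows"] if r is not None]}


def train(ctx: Dict) -> Dict:
    # Stand-in for real training: the "model" is just the mean.
    rows = ctx["rows"]
    return {**ctx, "model": sum(rows) / len(rows)}


# The template fixes the order; individual steps can be swapped per use case.
TRAINING_TEMPLATE = [("ingest", ingest), ("validate", validate), ("train", train)]
result = run_pipeline(TRAINING_TEMPLATE, {})
```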

Architecture examples for Lingaro’s MLOps framework

Now that some of the most important benefits of our MLOps framework have been laid out, we can take a look at the architectures we utilize in the framework.

Distributed machine learning for 70K+ classes (also known as the “many models” pattern)

-

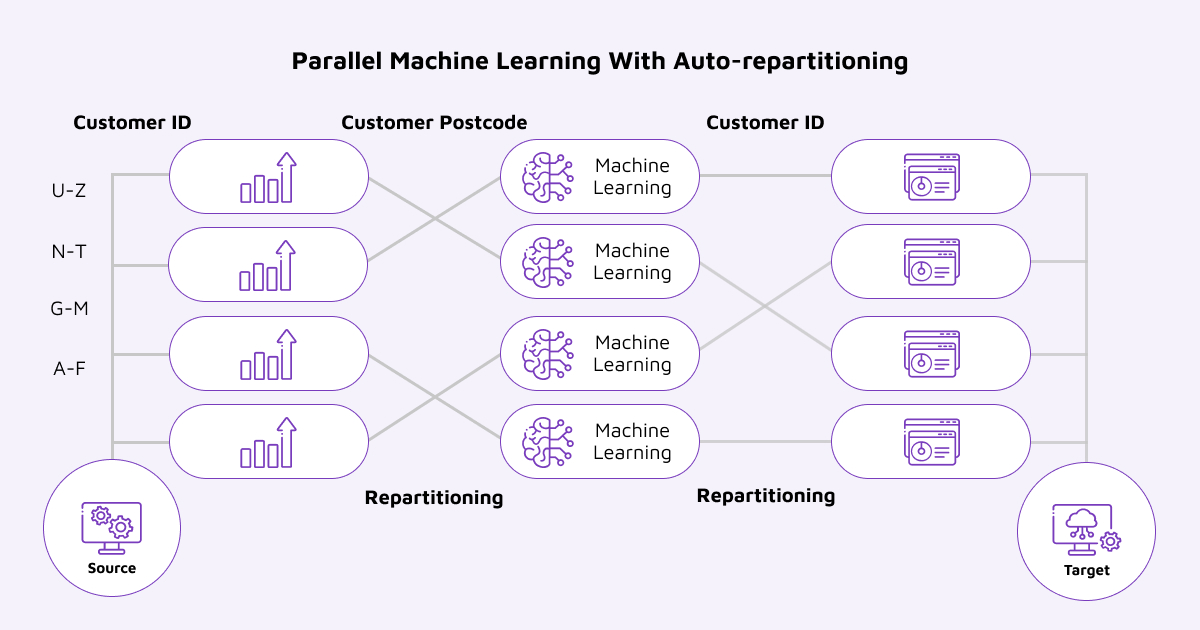

What it is: The many models architecture allows for multiple models to be trained in parallel and independently of one another. In each training instance, each model is given a subset of the training data (e.g., data divided by country or region).

-

Value added by Lingaro MLOps and data science services: Azure ML provides cost-effective parallel processing. To this, Lingaro brings MLOps maturity by allowing effective experimentation, change adoption, and metrics and KPI tracking as well as explainability per partition of data. Lingaro can also facilitate the configuration-based shift to Azure Databricks to enable larger-scale distributed training.

Figure 3. A technical diagram of distributed ML processes developed by Lingaro

- Application: Lingaro’s distributed machine learning processes can be used to train or score models in parallel with one another. For example, these processes can predict sales volume for each product sold and for each sales location. This is especially useful when sales patterns differ so much across products or locations that modeling aggregated data becomes ineffective.
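The core idea of the many models pattern, one independent model per data partition trained in parallel, can be sketched in a few lines. This is a simplified illustration under assumptions: it uses local threads instead of Azure ML compute, the "model" is just the mean of each partition's sales, and the region codes, numbers, and function names are made up.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def partition(rows, key):
    """Group rows by the value of `key` (e.g., region)."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row)
    return dict(groups)


def train_one(item):
    """Train a stand-in model for one partition: the mean of its sales."""
    name, rows = item
    return name, mean(r["sales"] for r in rows)


def train_many(rows, key, max_workers=4):
    """Train one model per partition, independently and in parallel."""
    parts = partition(rows, key)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(train_one, parts.items()))


# Illustrative data only.
rows = [
    {"region": "PL", "sales": 10}, {"region": "PL", "sales": 14},
    {"region": "DE", "sales": 30}, {"region": "DE", "sales": 50},
]
models = train_many(rows, key="region")
```

Because no partition depends on another, the same structure scales from a thread pool on one machine to a cluster by swapping out the executor.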

Active learning pattern

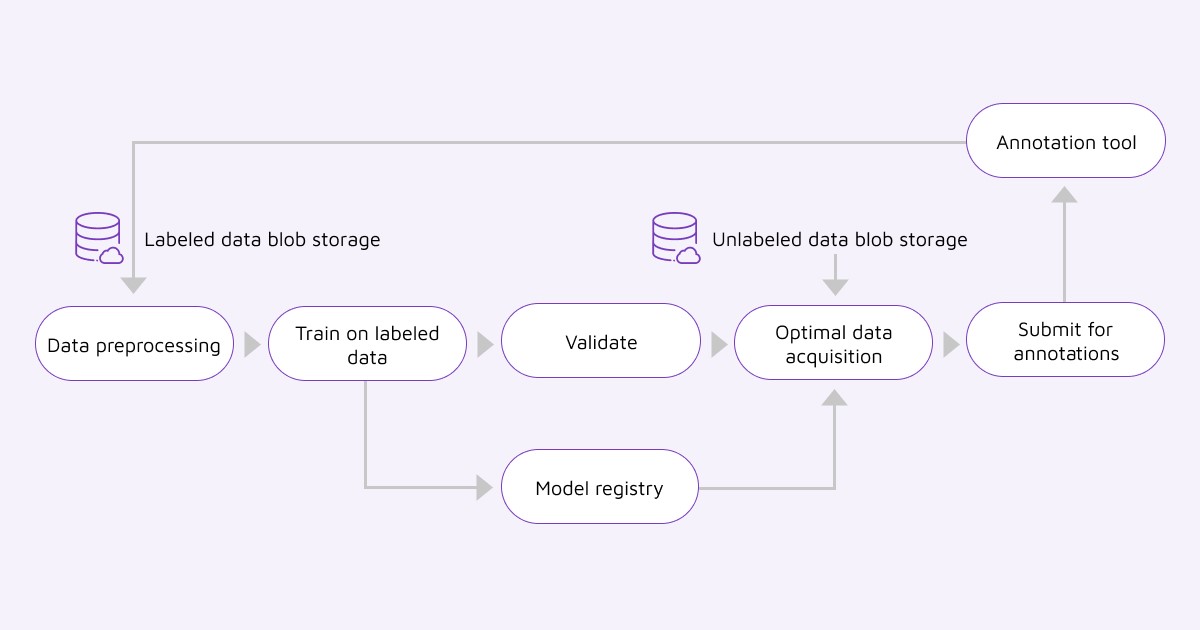

- What it is: The active learning pattern allows a machine learning process to learn from newly labeled data points automatically and iteratively. Data annotation requires human input, such as a person annotating textual data to identify sentences that relate to product quality. Active learning makes sure that the annotator’s time is used in the most effective way by directing them to annotate the data that helps the model the most.

- Value added by Lingaro MLOps and data science services: We ensure adaptive and accurate predictions by validating and incorporating active learning into ML solutions and by iteratively refining models based on real-world user feedback or annotations. This dynamic process enhances model performance, enables the system to evolve with changing user preferences and data patterns, and makes annotations cost-effective. We integrate the ML model life cycle with an assisted labeling service and trigger automated cycle iterations when new annotations are available.

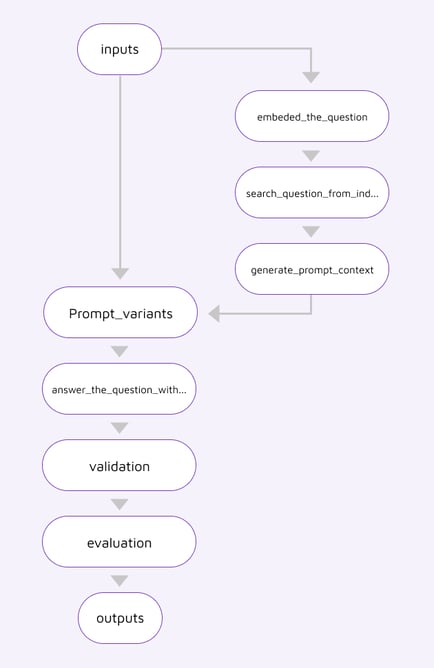

Figure 4. Lingaro’s active learning pipeline pattern

- Application: The active learning pattern, as augmented by Lingaro, can be used for measuring consumer satisfaction and needs as consumers react to changes to a seller’s product. Brands, products, outlets, competitors as well as key opinion leaders can all be factored into the model to discern the most efficient and effective marketing activities for that product.
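One common way to decide which items an annotator should label next is uncertainty sampling: pick the items the current model is least sure about. The sketch below assumes that strategy for illustration only; the source does not state which selection strategy the pattern uses, and the sentences, probabilities, and function names are hypothetical.

```python
def uncertainty(prob_positive):
    """Return 1.0 when the model is undecided (p = 0.5), 0.0 when certain."""
    return 1.0 - abs(prob_positive - 0.5) * 2.0


def select_for_annotation(predictions, budget):
    """Pick the `budget` items whose predictions are most uncertain."""
    ranked = sorted(predictions, key=lambda p: uncertainty(p[1]), reverse=True)
    return [text for text, _ in ranked[:budget]]


# (sentence, predicted probability that it relates to product quality)
predictions = [
    ("The lid broke after a week", 0.97),
    ("Arrived on Tuesday", 0.08),
    ("Feels cheaper than the old version", 0.55),
    ("Not sure it is worth the price", 0.42),
]
to_label = select_for_annotation(predictions, budget=2)
```

Here the two borderline sentences are sent to the annotator, while the two the model already classifies confidently are skipped.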

Generative pretrained transformer (GPT)/large language model (LLM)

- What it is: This architecture features a generic generative AI model, such as one of OpenAI’s GPT models, coupled with Lingaro’s in-context learning and fine-tuning services. These services make the generative AI model more enterprise-ready.

- Value added by Lingaro MLOps and data science services: Lingaro offers generative AI services and pipelines for in-context learning/fine-tuning, data transformation, orchestration, prompt engineering, evaluation, and validation to improve factual accuracy, reduce hallucinations, and ensure secure deployment of a generative AI model in enterprise environments. Thanks to the context derived from many data sources within the company, the model provides more accurate answers and generates predictions and other responses that can be easily validated for correctness.

- Application: Use GPT models to create apps that perform knowledge mining and management tasks (e.g., domain QA bots like a Teams GPT or IT support bot that explore company data), generate content (for example, marketing copy), generate reports (such as deep analysis of IT issues), and provide insights based on company data and reports.

Figure 5. Example of a generative AI/LLM inference pipeline pattern
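In-context learning, in its simplest form, means retrieving relevant company text and placing it in the prompt so the model answers from that context. The sketch below is a toy version under assumptions: the retriever ranks documents by plain word overlap rather than embeddings, the documents and question are invented, and the call to the model itself is left out.

```python
import re


def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by word overlap with the question (a toy retriever)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, context_docs):
    """Assemble a prompt that asks the model to stay within the context."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return (
        "Answer using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical company knowledge snippets.
documents = [
    "VPN access requests are approved by the IT service desk.",
    "Expense reports are due on the fifth working day of each month.",
    "Password resets are handled through the self-service portal.",
]
question = "How do I request VPN access?"
prompt = build_prompt(question, retrieve(question, documents, top_k=1))
```

Because the answer is tied to retrieved text, a reviewer can compare the response against the quoted context, which is what makes the output easier to validate.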

Lingaro’s MLOps framework makes developing AI solutions efficient and sustainable

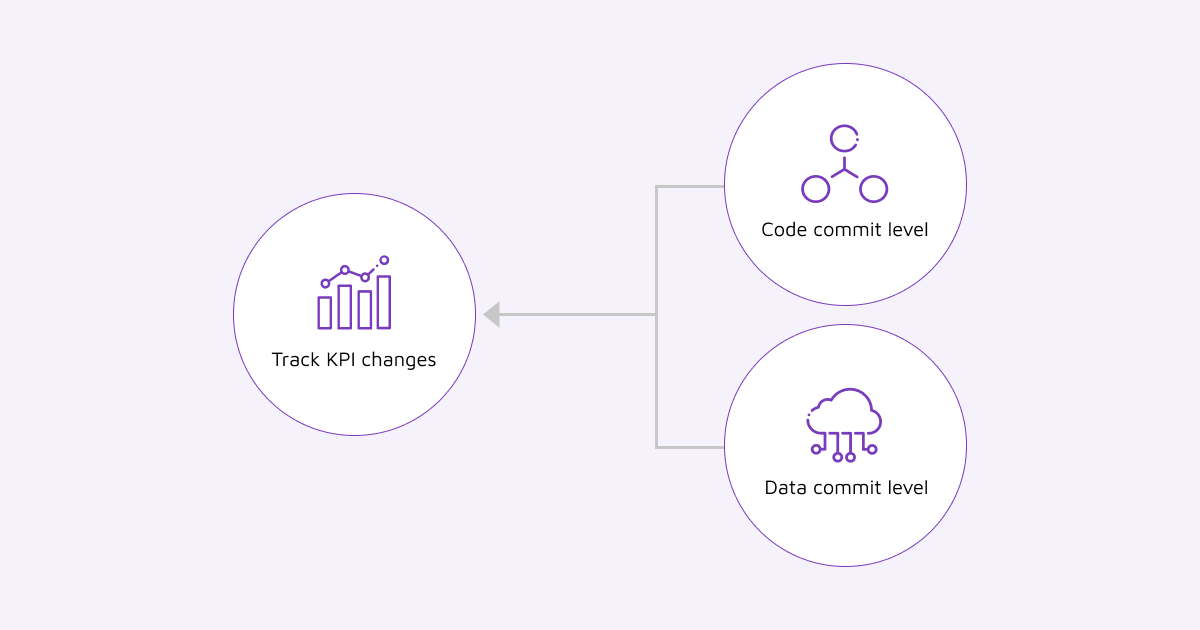

Solution evaluation and maintenance are essential components within the Lingaro MLOps Framework. Continuously changing data and the iterative nature of AI development are fundamental aspects of an AI system. With this in mind, we center the project delivery around the continuous assessment of how the implemented solution aligns with the defined requirements and objectives. We maximize the value of CI/CD processes and Azure Log Analytics to understand what is affecting data, metrics, and KPIs. These processes enable organizations to maintain the health and reliability of their ML pipelines and applications in a dynamic environment.

Figure 6. To understand the business impact of any change in a complex system, it is important to track KPIs at the code and data commit levels.
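Tracking KPIs at the code and data commit levels amounts to storing each KPI together with the code commit and the data version that produced it, so a change in the KPI can be attributed to one or the other. The following is a minimal in-memory sketch under assumptions: the `KpiLog` class, the commit hash, the data version labels, and the KPI values are all hypothetical, and a real setup would persist this in a tracking service.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Run:
    code_commit: str
    data_version: str
    kpis: Dict[str, float]


@dataclass
class KpiLog:
    runs: List[Run] = field(default_factory=list)

    def record(self, code_commit, data_version, **kpis):
        """Store KPI values alongside the code and data that produced them."""
        self.runs.append(Run(code_commit, data_version, dict(kpis)))

    def explain_change(self, kpi):
        """Compare the last two runs: how did the KPI move, and what changed?"""
        if len(self.runs) < 2:
            return None
        prev, last = self.runs[-2], self.runs[-1]
        return {
            "delta": last.kpis[kpi] - prev.kpis[kpi],
            "code_changed": prev.code_commit != last.code_commit,
            "data_changed": prev.data_version != last.data_version,
        }


log = KpiLog()
log.record("a1b2c3d", "data-v1", mape=0.10)
log.record("a1b2c3d", "data-v2", mape=0.25)  # same code, new data
change = log.explain_change("mape")
```

In this example the error rose while the code stayed the same, which points the investigation toward the new data rather than a code regression.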

The framework as a whole can be implemented as an MLOps standard across the organization. Using the same steps and processes for tracking, data validation, and other MLOps tasks frees users from having to determine how to do things from scratch for every ML project. Not only does this improve time to market, but it also allows productized PoC models to scale with the business as it grows. Some other core technical concepts we adopted to streamline AI solutions are the following:

- Reusable software assets, such as ML pipeline patterns
- Data science code, patterns, and configuration separation
- Local experimentation environment with notebook interface
- Integration layer for platform deployment
- DevOps CI/CD patterns
- Continuous performance and quality reports/alerts
- Data management patterns: Contracts, namespaces, layout lineage, versioning, rollbacks
- Continuous validation of code, data, and model
- Open-source MLflow model standard
- MLflow-integrated collaboration, tracking, and asset management
- Role-based development, network, and infrastructure security
- IaaC setup
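Two of the concepts listed above, data contracts and continuous validation of data, can be illustrated together. The sketch below assumes a very simple contract format (column name mapped to expected Python type); the column names, sample rows, and the `validate_contract` function are hypothetical and only meant to show the shape of such a check.

```python
def validate_contract(rows, contract):
    """Return a list of readable violations; an empty list means the data passes."""
    violations = []
    for i, row in enumerate(rows):
        for column, expected_type in contract.items():
            if column not in row:
                violations.append(f"row {i}: missing column '{column}'")
            elif not isinstance(row[column], expected_type):
                violations.append(
                    f"row {i}: '{column}' should be {expected_type.__name__}"
                )
    return violations


# Hypothetical contract for an incoming sales feed.
contract = {"store_id": str, "units_sold": int}
rows = [
    {"store_id": "S-001", "units_sold": 12},
    {"store_id": "S-002", "units_sold": "n/a"},  # wrong type
    {"store_id": "S-003"},                       # missing column
]
violations = validate_contract(rows, contract)
```

Running a check like this on every data delivery turns a silent schema change into an explicit alert before it reaches training or scoring.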

Adopting MLOps requires technical and business expertise

For enterprises, ML models add powerful capabilities that have the potential to create business value in the form of increased efficiency and productivity via automation, greater innovation through deeper business intelligence insights, and faster reactive and proactive actions via predictive analytics. To tap into this potential, the organization needs to build, deploy, and maintain ML models.

To recap, we showed that building, deploying, and maintaining models is best accomplished via MLOps. We also demonstrated that implementing MLOps has its own set of challenges — and that Azure AI and Azure ML are currently the best tools for overcoming them. Lastly, we showed here how enterprises can maximize the utility of Azure ML via the Lingaro MLOps Framework, in order to create the most business value out of ML solutions.

Lingaro’s AI and Data Science practice helps enterprises determine their most critical business challenges, frame them as AI and ML use cases, and develop, operationalize, and scale solutions accordingly. Lingaro provides end-to-end MLOps as a service — from strategic consulting, rapid productization of ML solutions, and model monitoring to implementation of responsible AI.

Lingaro also maintains a dedicated center of excellence that brings together top talent, knowledge, and resources to holistically manage and scale AI projects and data life cycles. Our expertise and capabilities are complemented by industry-recognized advanced analytics practices built through XOps-inspired initiatives as well as DevOps- and Agile-based principles.
